Prepared by Kristin Liu, Michael E. Anderson, Bonnie Swierzbin, Rick Spicuzza, & Martha L. Thurlow

This document has been archived by NCEO because some of the information it contains is out of date.

Any or all portions of this document may be reproduced and distributed without prior permission, provided the source is cited as:

Liu, K., Anderson, M. E., Swierzbin, B., Spicuzza, R., & Thurlow, M. (1999). Feasibility and practicality of a decision-making tool for standards testing of students with limited English proficiency (Minnesota Report No. 22). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes. Retrieved [today's date], from the World Wide Web: http://cehd.umn.edu/NCEO/OnlinePubs/MnReport22.html

In the 1997-98 school year Minnesota implemented an annual statewide comprehensive assessment plan. This assessment system includes statewide accountability testing and basic graduation requirements testing. In Minnesota, statewide accountability testing currently takes place in grades three, five, and eight. The tests given at grades three and five are called Minnesota Comprehensive Assessments (MCAs) and cover the areas of reading, mathematics, and writing. The MCAs are given solely as a measure of system accountability. The eighth grade tests are called Basic Standards Tests (BSTs) and have a dual role. Like the MCAs, they are used for statewide accountability, but they are also used as minimum standards that students must pass by twelfth grade in order to be eligible for a high school diploma. The BSTs are made up of tests of reading and mathematics administered in eighth grade and a writing test administered in tenth grade.

In the past, students with limited English proficiency (LEP) often were excluded from large-scale assessments and accountability systems because educators believed it was not in the best interest of students to take the tests, that is, the testing experience would be extremely frustrating and the test results would be invalid or not useful (Lacelle-Peterson & Rivera, 1994; O’Malley & Valdez Pierce, 1994; Rivera & Vincent, 1996). However, many educational researchers and policymakers now believe that LEP students should be included in these assessments to the maximum extent practical so that the needs of these students are not ignored (Lacelle-Peterson & Rivera, 1994; O’Malley & Valdez Pierce, 1994; Zehler, Hopstock, Fleischman, & Greniuk, 1994). In 1998, roughly 88% of eighth grade LEP students in Minnesota were included in the BST (Liu & Thurlow, 1999), and a similar percentage of LEP students in grades 3 and 5 participated in the MCAs.

Minnesota has developed guidelines for the use of accommodations for students with limited English proficiency on large-scale assessments. These guidelines are now available for both the Minnesota Basic Standards Tests and the Minnesota Comprehensive Assessments. Written guidelines have been distributed to schools across the state and can be found on the Department of Children, Families, and Learning (CFL) Web site. By themselves, however, written guidelines in standard text format have not been sufficient to help LEP students, who, along with their parents, teachers, and administrators, must make informed decisions about whether the students should participate in testing and what accommodations should be used. In a survey of English as a second language and bilingual educators across forty-five Minnesota schools, researchers asked how test participation decisions were made for LEP students (Liu, Spicuzza, Erickson, Thurlow, & Ruhland, 1997). Findings indicated that a lack of information flow in large urban districts was preventing ESL and bilingual educators from obtaining the knowledge they needed to be a part of the decision making process for their own students. In spite of repeated training efforts by the Minnesota Department of Children, Families and Learning and the existence of written guidelines, these respondents from urban districts often did not know who made participation and accommodation decisions and were unfamiliar with the test accommodations and modifications available to LEP students. For each test cycle there were revised guidelines, and educators had difficulty keeping track of new information as it was updated. Some respondents mentioned that they had seen early copies of testing guidelines in which some allowable accommodations had not been mentioned. They expressed frustration with the lack of knowledge about who should make participation decisions, about allowable accommodations and modifications, and about which guidelines were current.

Keeping in mind the clear need for as much readily accessible information as possible, researchers from the Minnesota Assessment Project, a four-year, federally funded effort awarded by the United States Department of Education, Office of Educational Research and Improvement, designed a decision making tool to inform English as a Second Language (ESL) and bilingual educators of the most up-to-date participation and test accommodation guidelines and to assist school staff in making informed decisions with students and their families. To make the tool interactive and widely available, it was designed to be used with a Web browser, either from a World Wide Web site or from a computer diskette. This report describes the decision making tool and reports the results of a study that examined the tool’s feasibility.

Background

Large-scale assessments, and in particular high stakes graduation tests such as Minnesota’s Basic Standards Tests (BSTs), are becoming more common throughout the United States. The new Title I legislation requires the participation of all students, including those with limited English proficiency, in large-scale assessments for the purpose of measuring students’ progress toward state standards. It also supports the development of appropriate test adaptations for these students (August & Hakuta, 1997). According to August and Hakuta (1997), without a range of test accommodations and modifications that are specific to LEP students, many of these students will not be able to participate in the testing or receive services.

When test accommodations and modifications are available for LEP students, the involvement of ESL personnel, bilingual educators, and other knowledgeable people is required to match the right testing conditions to a particular student. Sometimes the best match for a particular student may be to recommend that he or she not be tested until his or her English proficiency is more fully developed. An important part of making appropriate participation and accommodation recommendations for LEP students is having a set of stable testing guidelines that educators can refer to so that decisions are made on a consistent basis across students, schools, and districts. However, as recently as 1996, many states did not have written guidelines addressing the participation of LEP students in large-scale assessments and how to make appropriate accommodations decisions (Thurlow, Liu, Erickson, Spicuzza & El Sawaf, 1996). Those states that did have policies often grouped information about students with disabilities and LEP students, making it difficult to know what information applied to which students. Minnesota is one of the few states that has written test guidelines that are specific to LEP students and the BSTs in reading, mathematics, and writing. There are also guidelines available for the MCAs. Both sets of guidelines have been available primarily in paper format and are distributed to schools.

Creation of a Decision Making Tool

Guidelines for the inclusion and use of accommodations with LEP students and students with disabilities are available from the Minnesota Department of Children, Families and Learning (CFL); however, for some educators the paper format makes it difficult to understand the complexities of the decision making process. The purpose of this project was to design a presentation that breaks the guidelines into smaller pieces of text. There are two reasons to simplify the presentation of the guidelines. First, the new presentation enables someone who is not an expert in assessment to use the decision making process for planning. Second, sequenced guidelines can help parents, teachers, administrators, and students work together to make the best participation decisions for the student.

Other issues related to using a paper format for the assessment guidelines surface when discussing accommodation decisions for statewide testing with teachers. First, the Minnesota graduation rule has generated many memos and information reports, and a specific guideline easily gets lost or misplaced before the administrator or teacher receiving it can plan how to use the information. Therefore, the assessment guidelines are not necessarily available or ready to use when needed. Second, it is difficult to keep documents current and to ensure that everyone involved in making participation decisions is using the same information. For example, a familiar administrator is an allowable accommodation for LEP students taking the Basic Standards Test, but this accommodation has not been listed in the paper guidelines (Liu, Spicuzza, Erickson, Thurlow, & Ruhland, 1997).

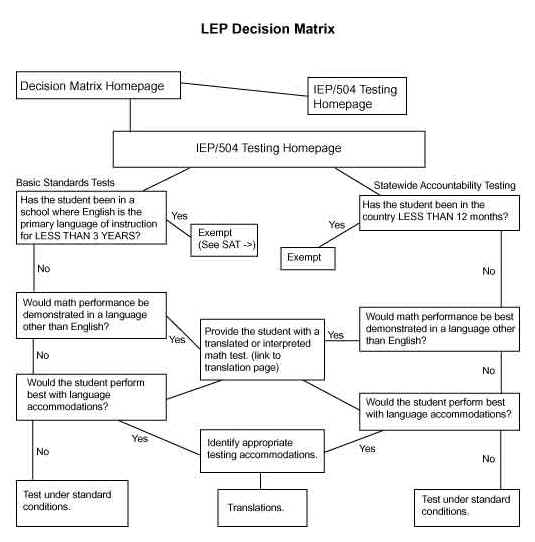

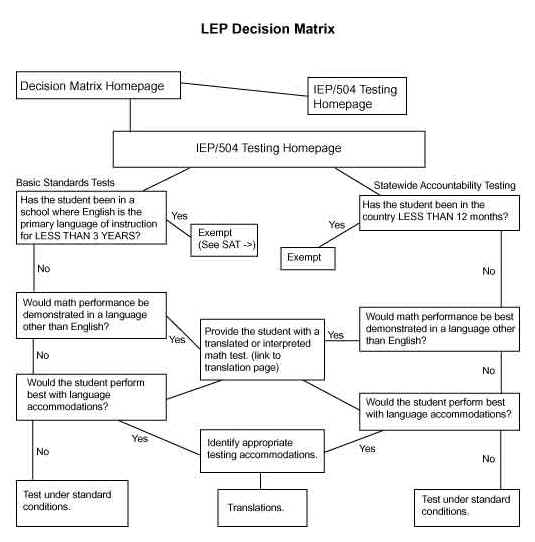

To mitigate these difficulties and to make the decision making process more efficient, a decision making tool containing the guidelines and a decision matrix (see Appendix A) was designed for the World Wide Web. A Web-based format was chosen because it allows the complexities of test decision making to be presented in an understandable and manageable manner. The Web allows for a combination of graphics and text, and it provides tools that enable the decision matrix to be broken down into a series of questions that need to be considered during a planning discussion.

A format was needed that would be easily accessible to all people who might be making testing decisions. This includes teachers, administrators, parents, and students. While the availability of computer technology and access to the World Wide Web vary greatly from district to district, and even from building to building and home to home, most schools and families have some access to the Web or will in the near future. A paper format of guidelines will always be needed; however, the Web-based version of the guidelines has several advantages. With the Internet, decision making tools such as this can be made available to everyone in the state, and changes in the process can be made instantly since everyone is accessing the same copy. Using the Internet also allows teachers and parents to have access from home or other community sites.

It should also be noted that one does not have to be connected to the World Wide Web in order to use the decision making tool; access to a Web browser (such as Netscape or Microsoft Explorer) is all that is needed. If one has a Web browser, but is not connected to the Web, the decision tool can be run from a diskette. In fact, this is how some of the people who participated in the field test of the tool had access. This format does not allow the diskette copy to be instantly updated, but it does give educators and parents access to the decision making tool.

Finally, the Web tool was designed to be simple in terms of graphics and other features. This allows pages to load quickly and makes the site easy to navigate. The design is also straightforward. Since educational teams are often making decisions for several students, the decision matrix is short and simple. People with varying technological backgrounds can become familiar with the tool very quickly.

The Decision Making Tool

The decision making tool used in this study is an electronic version of the Minnesota guidelines for making test participation decisions plus a decision matrix (see Appendix A for an overview of the matrix). The address for the decision making tool is http://www.cehd.umn.edu/NCEO/map/. The decision matrix has two main branches, one for students with limited English proficiency and another for students with disabilities; both are centered on making decisions about inclusion and the use of accommodations in statewide testing. The first page of the decision making tool ends with this statement and decision request.

This web site was designed to help parents and educators make decisions about student participation in different forms of statewide testing. Follow the links below to a decision matrix for Limited English Proficient (LEP) students or students with IEP or 504 plans.

Within each of the LEP and IEP/504 plan branches, the Web site has two lines of decision making that reflect the dual role of the Basic Standards Tests: one for statewide accountability testing in grades three, five, and eight, and one for graduation requirements testing in grades eight and beyond. The reason for having these two lines of decision making is that the decision may vary according to the reason a student is taking a test, either for statewide accountability or for individual achievement toward graduation. The second page of both the LEP and IEP/504 branches ends with the following statement and decision request.

Follow the links below to see if a student should participate in either form of testing and what kinds of accommodations are available for each test.

• Graduation Standards

• Statewide Accountability Testing

As the user enters the decision matrix, a question is posed about the student for whom the test participation decision is being made. The person using the decision matrix responds to this question by clicking on the appropriate answer, LEP or IEP/504. The user is then given information and asked a second question about the type of test for which a decision is being made. Next, the user is asked about the student's status to help determine whether the student should be included in the standardized test. If the student should be included, the user is given information about accommodations; if not, the user is given information on alternate forms of assessment. At any point in the process, the user can go back a step or return to the beginning of the decision matrix. In the end, the user is provided with guidance on how the student could be included in the standardized test so that the student can best demonstrate knowledge within the state guidelines.
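The question sequence described above can be pictured as a small tree that is walked one answer at a time. The Python sketch below is illustrative only and is not the tool's actual implementation, which was a set of linked Web pages. The class names (`Node`, `Session`), the function `build_matrix`, the question wording, and the outcome text are all hypothetical placeholders rather than CFL guideline content, and the single yes/no status question stands in for the more detailed student-status questions in the real matrix. A history list supports the "go back a step" and "return to the beginning" navigation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One page of a hypothetical matrix: a question with answer links,
    or (when it has no options) an outcome page."""
    text: str
    options: dict[str, Node] = field(default_factory=dict)

    def is_outcome(self) -> bool:
        return not self.options


def build_matrix() -> Node:
    # Placeholder wording throughout; not the actual CFL guideline text.
    def status_question(test: str) -> Node:
        return Node(
            f"Based on the student's status, should the student take {test}?",
            {
                "yes": Node(f"Information on accommodations for {test}"),
                "no": Node(f"Information on alternate forms of assessment for {test}"),
            },
        )

    def test_question() -> Node:
        return Node(
            "Which type of test is the decision for?",
            {
                "graduation standards": status_question("Basic Standards Testing"),
                "statewide accountability": status_question("Statewide Accountability Testing"),
            },
        )

    return Node(
        "Is the decision for an LEP student or a student with an IEP/504 plan?",
        {"LEP": test_question(), "IEP/504": test_question()},
    )


class Session:
    """Tracks the pages visited so the user can go back or start over."""

    def __init__(self, root: Node) -> None:
        self.root = root
        self.history = [root]

    @property
    def current(self) -> Node:
        return self.history[-1]

    def answer(self, choice: str) -> Node:
        # Raises KeyError if the choice is not offered on the current page.
        self.history.append(self.current.options[choice])
        return self.current

    def back(self) -> Node:
        if len(self.history) > 1:  # cannot go back past the first page
            self.history.pop()
        return self.current

    def restart(self) -> Node:
        del self.history[1:]  # keep only the first page
        return self.current


if __name__ == "__main__":
    s = Session(build_matrix())
    s.answer("LEP")
    s.answer("graduation standards")
    s.back()  # change of mind: return to the test-type question
    s.answer("statewide accountability")
    print(s.answer("yes").text)
    # prints: Information on accommodations for Statewide Accountability Testing
```

Keeping every visited page in a list, rather than only the current page, is what makes the back and restart actions trivial in this sketch.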

To determine whether a computer-based decision making tool is useful for people involved in making decisions about the participation of LEP students in the Basic Standards Tests and Minnesota Comprehensive Assessments, a survey was developed for educators and administrators who participate in the decision making process. The survey included both closed-response and open-ended items (see Appendix C).

In December 1998 and January 1999, a total of 102 surveys were sent to seven districts across the state of Minnesota. These districts were chosen from a larger set of districts that had previously agreed to work with CFL during the development and administration of the BSTs. The chosen districts have relatively large English as a Second Language (ESL)/Bilingual Education programs. Some districts that had been recommended were not able to participate because of a lack of the computer resources needed to use the decision making tool.

The CFL classifies all districts in the state of Minnesota into one of four categories based on size and location. The categories are:

• Cities of the First Class (large, urban school districts)

• Suburban-Metro

• Greater Minnesota > 2000 (rural districts with more than 2,000 students)

• Greater Minnesota < 2000 (rural districts with fewer than 2,000 students)

Three of the districts included in this study were in greater Minnesota with a population over 2,000 students, and four districts were in suburban-metro areas. Urban districts were not included because in many cases, they have too many students to make decisions individually using the decision making tool.

The ESL coordinator in each of the selected districts was sent the World Wide Web address of the decision making tool as well as a copy of the tool on a floppy disk, formatted for Macintosh or IBM PC-compatible computers, whichever was appropriate for their district. Thus, survey respondents had two ways to access the tool: through the Web site if they had Internet access, or from the disk if they had a Web browser but not Internet access.

For each district, the ESL coordinator received 16 surveys to distribute plus one extra survey for making copies if needed. The ESL coordinator was asked to distribute the surveys to those educators and parents who were making decisions about the participation of LEP students in the Basic Standards Tests and Minnesota Comprehensive Assessments. After surveys were completed, the coordinator was asked to collect the surveys and send them to NCEO for analysis.

In addition to the surveys distributed through school districts, a shorter survey was made available to participants in the 1999 Statewide LEP Conference who would examine the tool during the conference (see Appendix C). Respondents who completed surveys at the conference were from four greater Minnesota districts with a population under 2,000 students and one suburban-metro district.

Once surveys were returned, they were numbered and all quantitative responses were entered into a computer database for analysis. For the qualitative responses, one member of the research team used an inductive qualitative research technique to develop a coding system as described by Bogdan and Biklen (1992). All of the qualitative data were reviewed holistically for regularities and themes. A system of coding categories based on the themes was developed and verified; all of the participants’ responses were clustered under these categories.

A total of 12 surveys (11.8%) were returned from 4 of the 7 districts (57%). The individual return rate of 11.8% was very low; however, extra surveys had been sent to each coordinator, who also had the option of distributing fewer surveys than were sent or copying the surveys and distributing more. In addition, a number of respondents used the tool to make decisions for more than one student before completing the survey. An additional 5 shorter surveys were returned, for a final total of 17 surveys.

Respondents were primarily ESL or Bilingual Education teachers. In addition, two district ESL coordinators and one administrator completed the survey. Table 1 shows the breakdown of respondents by position. Data from surveys were both quantitative and qualitative. The quantitative data are addressed first.

Availability and Familiarity of Computer Technology

Of the seven districts that were chosen to participate in the study, one (14%) was unable to do so because potential participants lacked the computer technology to use the decision making tool; that is, there was no computer available where the decision making process took place or the computer that was available lacked the software necessary to run the decision making tool. Another district was loaned a Macintosh laptop computer by the Minnesota Assessment Project in order to be able to use the tool and complete the survey.

Most of the respondents (70%) used the tool on a Macintosh computer, another 24% used the tool on a PC, and one respondent (6%) reviewed a printed version of the tool. Respondents were asked about their familiarity with computers in general, with the specific type of computer they used to look at the tool, and with the Internet. More than half of the respondents (53%, 9 of 17 responses) indicated they were very familiar with computers in general; another 41% (7 of 17) were somewhat familiar with them, and one respondent was a little familiar with computers before using the decision making tool. Of the 16 respondents who used the tool on a computer, 63% were very familiar with the type of computer they used the tool on, and 37% were somewhat familiar with it. Of these same 16 respondents, 56% were very familiar with the Internet and 44% reported being somewhat familiar with it. All 16 respondents who used the decision making tool on a computer reported having the computer skills needed to use the tool.

Overall, 82% of the respondents (14 of 17) reported that they have access to a computer at home. Of these respondents, 93% (13 of 14) indicated that they have Internet access at home. All 17 of the respondents indicated that they have computer access at school and all but one has Internet access there.

On the longer version of the survey, which was sent to the school districts, respondents were asked what Internet browser they have access to. Seven of the nine (78%) respondents who have Internet access at home use Netscape Navigator while the remaining 22% have Microsoft Explorer. Of the 11 respondents who have Internet access at their schools, 8 (73%) have only Netscape Navigator, 1 (9%) has only Microsoft Explorer, and 2 (18%) have both.

Table 1. Positions Held by Survey Respondents

| Position | No. of Respondents | % Holding This Job |
|---|---|---|
| ESL/Bilingual Education Teacher | 14 | 82% |
| District ESL Coordinator | 2 | 12% |
| Administrator | 1 | 6% |
| Total | 17 | 100% |

Participation Decisions

Respondents were asked who in their school district usually makes decisions about the participation of LEP students in Basic Standards Tests and Minnesota Comprehensive Assessments. Ten respondents (59%) indicated that some type of committee makes the decision. All of these committees include the ESL teacher, and some include the classroom teacher, district ESL coordinator, and administrator; however, only 30% (3 of 10) include the LEP student’s parents, and none includes the LEP student. The second most common response after "committee decision" was "individual ESL or Bilingual Education teacher decision," with 29% of responses (5 of 17) falling in this category. One respondent (6%) indicated that the district ESL coordinator usually makes participation decisions, and another respondent (6%) did not have an answer for this item.

Overall, 59% (10 of 17) of the respondents said that participation decisions in their districts were made for individual LEP students on a case-by-case basis. Another 18% (3 of 17) said that decisions were made for the LEP students as a whole. One respondent (6%) marked both "individual" and "as a whole," and an additional three respondents (18%) did not answer this question.

Table 2. Respondents' Familiarity with Computer Technology (number of respondents)

| Familiarity | Computers in General | Type of Computer | Internet |
|---|---|---|---|
| Very Familiar | 9 | 10 | 9 |
| Somewhat Familiar | 7 | 5 | 7 |
| A Little Familiar | 1 | 1 | 0 |
| Not at all Familiar | 0 | 0 | 0 |

Using the Decision Making Tool

Of the 12 respondents who reviewed the computer-based tool in the school districts, 9 (75%) looked at it as part of a decision making group. Another two respondents (17%) looked at the computer-based tool alone, and one respondent reviewed a paper version. All five of the respondents who reviewed the computer-based tool at the Statewide LEP Conference did so alone.

Respondents in the school districts were asked how many students they used the tool to make participation decisions for. Responses to this question varied from 33 ("all the students I work with") to 0 (respondents reviewed the tool but did not make a decision using it). Table 3 shows the breakdown of respondents by the number of students for which they made decisions using the tool.

These respondents were also asked approximately how long it took for them to go through the tool for the first student for which they used the tool and the last student for which they used the tool. For the first student, the respondents’ answers ranged from one to thirty minutes while for the last student, they ranged from two to fifteen minutes (see Table 4). The average time to use the tool for the first student was 11.25 minutes, and the average for the last student was 6.7 minutes. In all but two situations, the time needed to use the tool decreased by half or more from the first to the last use.

Overall, 82% (14 of 17) of respondents thought the format of the computer-based tool was easy to understand. Another 12% (2 of 17) thought that it was not easy to understand; one of these respondents commented, "I would have liked to have been walked through this...Make it very easy & very elementary for those of us that are not familiar with this." One respondent (6%) used a paper version of the tool and therefore did not comment on the ease of understanding the computerized tool. Respondents were also asked whether the tool was easy to use. A large majority (15 of 17, 88%) thought it was easy to use. One respondent (6%) did not think so, and commented, "I’m not sure if I have seen all the parts." Again, one respondent did not comment on ease of use.

Table 3. Amount of Tool Usage

| No. of Students | No. of Respondents | % of Respondents |
|---|---|---|
| 33 | 1 | 8% |
| 5 or more | 3 | 25% |
| 2 | 2 | 17% |
| 0 | 3 | 25% |
| Conflicting Information | 1 | 8% |
| No Answer | 2 | 17% |

Respondents were asked whether they found the tool helpful in (1) increasing the awareness of testing options for LEP students, and (2) making careful individual decisions. Eleven of seventeen respondents (65%) thought it was helpful in increasing their awareness, one respondent (6%) did not, and the remaining five (29%) did not answer the question. Eleven respondents (65%) also thought that the tool was helpful in making careful decisions, while six respondents (35%) had no answer to this question. When asked whether they would use the tool again to make participation decisions for LEP students, 59% of respondents (10 of 17) said that they would do so; one commented, "Especially to determine accommodations." Another 6% (1 of 17) said that they would not use it again, commenting, "I think I understand how to figure it out without the computer." A further 29% (5 of 17) were not sure whether they would use it again. They offered various reasons for their uncertainty: one cited a lack of technology, two said they were already familiar with the participation guidelines, one expressed a desire to see the information on paper, and one was an administrator who said that teachers normally make the participation decisions. In addition, 6% (1 respondent) did not answer this question. Ten respondents (59%) said they would recommend the tool to others who are involved in participation decisions. Three respondents (18%) were unsure whether they would recommend it: one of this group said, "It was helpful in deciding accommodations but I could follow the same train of thought on my own," another commented that the recommendation would depend on staff familiarity with graduation standards, and the third was unsure because of a lack of experience with the tool. Another 23% (4 of 17) did not provide an answer for this question.

Table 4. Time Needed to Use the Tool

| Minutes for First Student | Minutes for Last Student | No. of Respondents |
|---|---|---|
| No Response | No Response | 2 |
| 1 | No Response | 1 |
| 2 | No Response | 1 |
| 4.5 | 2 | 1 |
| 10 | 2 | 1 |
| 10 | 5 | 2 |
| 10 | 8 | 1 |
| 10 | 15 | 1 |
| 25 | 10 | 1 |
| 30 | No Response | 1 |

Note: Seven respondents reported using the decision making tool for more than one student.
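The averages reported for first and last use can be reproduced from Table 4. The short Python check below assumes, as the reported figures imply, that "No Response" cells are left out of each average; the "10 | 5" row represents two respondents, so it is entered twice. The variable names are illustrative.

```python
from statistics import mean

# (first-student minutes, last-student minutes) for each of the 12 district
# respondents in Table 4; None marks a "No Response" cell.
TABLE_4 = [
    (None, None), (None, None),
    (1, None),
    (2, None),
    (4.5, 2),
    (10, 2),
    (10, 5), (10, 5),  # this row was reported by two respondents
    (10, 8),
    (10, 15),
    (25, 10),
    (30, None),
]

first = [f for f, _ in TABLE_4 if f is not None]
last = [last_min for _, last_min in TABLE_4 if last_min is not None]
paired = [(f, last_min) for f, last_min in TABLE_4 if f is not None and last_min is not None]

avg_first = mean(first)  # 112.5 / 10
avg_last = mean(last)    # 47 / 7
# Respondents whose last-student time was at most half their first-student time
halved = [(f, last_min) for f, last_min in paired if last_min <= f / 2]

print(f"first: {avg_first:.2f} min (n={len(first)})")
print(f"last:  {avg_last:.1f} min (n={len(last)})")
print(f"halved or better: {len(halved)} of {len(paired)}")
```

The output (11.25 and 6.7 minutes, with 5 of 7 paired respondents at least halving their time) matches the figures given in the text, including the two situations in which time did not fall by half.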

In addition to the quantitative results discussed above, some comments were written in answer to open-ended questions as well as in the margins of the surveys. A list of the comments was compiled and then separated into the following topic categories:

1. Clarity of information in the tool

2. Usefulness of the tool

3. Timing of information

4. Amount of information in the tool

5. Technology issues

6. Testing process issues

7. Clarity of the survey

8. Miscellaneous

Major themes of each category are mentioned below. (For a complete list of comments, see Appendix D.)

Usefulness of the tool.

Some of the respondents thought the tool was useful in the decision making process, others believed that they were familiar enough with the state guidelines to make decisions without a tool, and still others thought that they needed the information provided by the tool but wanted to see it on paper.

Timing of information.

There was concern expressed about having information about accommodations early enough so that appropriate materials could be ordered for testing. A respondent said, "This tool would have helped me more if I could have used it early enough to order the accommodations my students would benefit from. We had to order earlier in the year–so now I have extra math cassettes but no math translations which would have been better for some of my students."

Amount of information in the tool.

A respondent commented that "familiar examiner" should be listed as a possible accommodation. Another suggested printing a record of the decision at the end of the process.

Technology issues.

Issues in this category included inability to access the tool, readability (font size), and how one determines when all the necessary material in the tool has been read.

Testing process issues.

This category included questions about the availability of translated mathematics tests and how students’ tests are identified as "LEP." There was also concern expressed about the unspoken time limit on tests when LEP students are tested in the same room as mainstream students.

Clarity of the survey.

Some respondents were confused by the fact that the tool refers to Basic Standards Testing and Statewide Accountability Testing while the survey uses both those terms and the acronyms BST and MCA.

Miscellaneous.

Respondents suggested other uses of World Wide Web technology, including a statewide teachers’ discussion page or chat line.

The decision making tool was developed to make the process of making decisions about accommodations and modifications for LEP students in Basic Standards Testing and Statewide Accountability Testing easier to understand. Further, it was designed to make the information needed to make such decisions accessible to those involved in the decision making process.

The decision making tool is efficient.

ESL and Bilingual Education professionals reported that the decision making tool was simple, clear, and easy to use, which indicates that the tool as developed was efficient. The tool also appears to have been helpful in presenting the critical information and questions for participation and test accommodations. This would be expected, since the tool was designed to include the CFL guidelines for determining level of participation and accommodations for LEP students, and it indicates that the tool communicates the CFL guidelines in a user-friendly manner. After their initial use of the tool, respondents reported needing an average of less than 7 minutes to use it for a student, another indicator that the tool is efficient.

The decision making tool and paper guidelines are complementary.

In general, respondents found the tool useful, but a notable number of comments indicated that people want to see information on paper. Apart from individual differences in preferred communication style, paper guidelines and a Web-based tool have complementary advantages. A respondent’s comment, "I’m not sure if I have seen all the parts," indicates the difficulty of navigating a Web site compared to reading instructions on paper from beginning to end. Paper guidelines have the advantage of a familiar, comfortable format, while Web-based guidelines can be updated instantly, ensuring that all participants in the decision making process have the same, most current information. Some respondents suggested another way to use paper and the decision making tool in a complementary fashion: adding a printout at the end of the decision making process showing the choices made at each step and the final decision for the student in question.

ESL educators lack important information for decision making.

A number of comments indicated that ESL and Bilingual Education teachers and administrators are still struggling to obtain the knowledge they need to take part in the decision making process. For example, one respondent was confused about the distinction between Basic Standards Testing and Statewide Accountability Testing. Another commented that the tool needs to explain the difference between accommodations and modifications. Other respondents had questions about translations of mathematics tests, the Basic Standards Writing Test, and how LEP students’ scores are identified as LEP scores.

In summary, when testing accommodations are made available so that as many students as possible can participate meaningfully in an assessment, the people making accommodation decisions need efficient and accessible guidelines. These people may include administrators, teachers, counselors, parents, and students. When testing decisions are made on an individual basis, the guidelines need to be clear so that decisions are made fairly and accommodations actually make the tests more accessible to the student. When students receive the accommodations they need, the validity of their test results increases.

At present, most states do not have a decision making process in place that walks people through this often complicated task. The decision making tool designed for educators in Minnesota and available through the World Wide Web is an attempt to streamline this process. Although some educators may not be able to use the tool because they lack access to the necessary technology, it offers many benefits. Most ESL professionals found it clear and useful. As access to technology increases in schools and homes around the state, the tool should become more widely accessible. The decision making tool is also efficient, requiring little time, and thorough. Using the survey data contained in this report, the decision making tool can be improved so that it is even more useful to educators.

August, D., & Hakuta, K. (1997). Improving schooling for language-minority children. Washington, DC: National Academy Press.

Bogdan, R., & Biklen, S. (1992). Qualitative research for education. Boston: Allyn and Bacon.

Lacelle-Peterson, M., & Rivera, C. (1994, Spring). Is it real for all kids? A framework for equitable assessment policies for English language learners. Harvard Educational Review, 64(1), 55-75.

Liu, K., Spicuzza, R., Erickson, R., Thurlow, M., & Ruhland, A. (1997, October). Educators’ responses to LEP students’ participation in the 1997 Basic Standards Testing (Minnesota Report 15). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Liu, K., & Thurlow, M. (1999). Limited English proficient students’ participation and performance on statewide assessments: Minnesota Basic Standards reading and math, 1996-1998 (Minnesota Report 19). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

O’Malley, J. M., & Valdez Pierce, L. (1994). State assessment policies, practices, and language minority students. Educational Assessment, 2(3), 213-255.

Rivera, C., & Vincent, C. (1996). High school graduation testing: Policies and practices in the assessment of LEP students. Paper presented at the Council of Chief State School Officers, Phoenix.

Thurlow, M., Liu, K., Weiser, S., & El Sawaf, H. (1997). High school graduation requirements in the U.S. for students with limited English proficiency (Minnesota Report 13). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Zehler, A. M., Hopstock, P. J., Fleischman, H. L., & Greniuk, C. (1994, March 28). An examination of assessment of limited English proficiency students [On-line]. Task Order D070 Report. Arlington, VA: Special Analysis Center. Available: http://www.ncbe.gwu.edu/miscpubs/siac/lepasses.htm.

Overview of Decision Matrix

Minnesota Assessment Project Decision Making Tool: Graduation Standards and Statewide Accountability Testing

http://www.cehd.umn.edu/NCEO/MAP/LEP1.html

Computer-Based Decision Making Tool Survey

Questions marked with an asterisk were on the short version of the survey.

Terms: BST = Basic Standards Tests (grade 8+)

MCA = Minnesota Comprehensive Assessments (grades 3 & 5)

Part I: Tell us about yourself

*1. Who are you? (Please check one.)

a. ___ District LEP coordinator

b. ___ ESL teacher/Bilingual Ed teacher

c. ___ regular classroom teacher

d. ___ administrator

e. ___ other (please describe) _______________________

_______________________

*2. Is your school district: (Please check one.)

a. ___ urban (e.g., Minneapolis, St. Paul, Duluth)

b. ___ suburban-metro (e.g., Bloomington, Eagan, Roseville)

c. ___ a greater Minnesota district with more than 2,000 students (e.g., Rochester, St. Cloud, Bemidji)

d. ___ a greater Minnesota district with fewer than 2,000 students (e.g., Mountain Lake, Owatonna, Windom)

3. How did you look at the Decision Making tool? (Check one.)

a. ___ on computer; what kind? __________________________________

b. ___ on paper

c. ___ I never looked at it (Another person used the tool.)

*4. How familiar were you with the following equipment before you used the Decision Making tool?

a. A computer in general (Circle one number choice.)

1. not at all familiar

2. a little familiar

3. somewhat familiar

4. very familiar

b. The specific type of computer (IBM, Macintosh) you used to look at the tool (Circle one number choice.)

1. not at all familiar

2. a little familiar

3. somewhat familiar

4. very familiar

5. I didn’t look at it on a computer

c. The Internet (Circle one number choice.)

1. not at all familiar

2. a little familiar

3. somewhat familiar

4. very familiar

5. I didn’t look at it on a computer

*5. Did you have the computer skills you needed to use the Decision Making tool? (Check one.)

a. ___ Yes

b. ___ No; please explain _______________________________________

c. ___ I didn’t look at it on a computer (Someone else used the tool.)

*6. Do you have access to a computer in the following places? (Check one answer for each letter.)

a. at home

1. ___ yes (If yes, do you have Internet access? a. ___ yes b. ___ no)

2. ___ no

b. at school

1. ___ yes (If yes, do you have Internet access? a. ___ yes b. ___ no)

2. ___ no

7. If you answered yes to any of the parts of question 6, which Internet browsers (e.g., Netscape Navigator, Microsoft Internet Explorer) are available on this machine?

a. (home)

b. (school)

c. ___ I don’t have access to a computer

Part II: Tell us about the Decision Making tool

*8. In your school or district, who usually makes decisions about the participation of LEP students in Basic Standards Tests and Minnesota Comprehensive Assessments? (Check only one.)

a. ___ the district LEP coordinator

b. ___ an individual ESL or Bilingual teacher

c. ___ an administrator

d. ___ a group of educators; please describe

e. ___ other; please describe

*9. Does the person or people making testing decisions for LEP students usually make decisions: (Check one.)

a. ___ for all of the LEP students in the school/district as a whole

b. ___ for individual LEP students on a case-by-case basis

10. When you looked at the tool, were you: (Check one.)

a. ___ alone

b. ___ part of a group

c. ___ I never looked at the tool (Someone else used the tool.)

11. For how many students did you use the tool to help you make Basic Standards Tests and Minnesota Comprehensive Assessment participation decisions? (Check one.)

a. ___ zero

b. ___ one

c. ___ two

d. ___ three

e. ___ four

f. ___ five or more

g. ___ all of the students I work with; how many? ______

12. How many minutes did it take for you to go through all the parts of the tool and make decisions for the first student for which you used it? (Fill in the blank with an approximate number of minutes, or fill in "NA" if you did not use the tool.)

__________ minutes to go through it for the first student

13. If you used the tool for more than 1 student, how many minutes did it take for you to go through all the parts of the tool for the last student for which you used it? (Fill in the blank with an approximate number of minutes, or fill in "NA" if you did not use the tool.)

__________ minutes to go through it for the last student

*14. Was the format of the tool easy to understand? (Check one.)

a. ___ yes

b. ___ no; why not? ___________________________________________

c. ___ I never looked at the Decision Making tool. (Someone else used the tool.)

*15. Was the tool easy for you to use?

a. ___ yes

b. ___ no; why not? ___________________________________________

c. ___ I never looked at the Decision Making tool. (Someone else used the tool.)

*16. What additional information about participation in Basic Standards Tests and Minnesota Comprehensive Assessments would you like to see included in this tool? (Write your answer below.)

*17. Was this tool helpful in doing the following? (Check one option for each.)

a. Increasing your awareness of testing options for LEP students?

1. ___ yes

2. ___ no

b. Making careful individual student decisions?

1. ___ yes

2. ___ no

*18. In the future, would you use this tool again to make BST and MCA participation decisions for LEP students?

a. ___ yes

b. ___ no; why not? ___________________________________________

c. ___ I’m not sure; why?

*19. Would you recommend this tool to others making these decisions?

a. ___ yes

b. ___ no; why not? ___________________________________________

c. ___ I’m not sure; why?

*20. How can this tool be improved so it is more useful for educators making decisions about the participation of LEP students in BSTs and MCAs? (Please write your thoughts below.)

21. What questions do you still have about the participation of LEP students in the BST and MCAs? (Please write your thoughts below.)

Comments from Survey Respondents

Clarity of information in tool

1. It’s fine.

2. You might want to distinguish between modifications and accommodations somewhere in the tool. It was very clear that accommodations were the focus, but I couldn’t find where "Modification to the standard" was explained (the reference was taken from http.../NCEO/MAP/LEP1.html)

3. I liked the beginning explanation. I think it’s confusing to be discussing Basic Standards testing & Statewide Accountability Testing. It seems that they could have been given better names. One is never sure which is which. It’s confusing.

4. The language is too complicated and lengthy to be used in a meeting with an LEP parent.

5. Information about students who have been in the English setting for less than 3 years, but do have language skills in English to try the test (BST). It led me to the dead end of exempt without further questions.

6. I would have liked to have been walked through this. #1, #2, #3, etc. Make it very easy & elementary for those of us that are not familiar with this.

7. Not for a LEP student.

8. Good information.

9. It’s OK.

Usefulness of the tool

1. (I would use it again) especially to determine accommodations.

2. I think I understand how to figure it out without the computer.

3. I might (use the tool) for certain students, but I’m already familiar with the state guidelines.

4. I think I could make decisions if I had it in writing on paper. Maybe easier for me.

5. Maybe (would use the tool again)–but most of the information needed I know & would not need to go through a flow chart.

6. It was helpful in deciding accommodations but I could follow the same train of thought on my own to decide whether exemption or taking the test should be done.

7. Depends on staff familiarity with graduation standards

8. I will also share it with secondary counselors.

Timing of information

1. This tool would have helped me more if I could have used it early enough to order the accommodations my students would benefit from. We had to order earlier in the year–so now I have extra math cassettes but no math translations which would have been better for some of my students.

Amount of information in the tool

1. Start listing "familiar examiner" as a possibility, even if it is already "legal." Many administrators would be relieved to have us do the testing and it would benefit the kids.

2. Add a means to print a piece of paper at the end with the student’s name and what decision was made.

Technology issues

1. Larger font?

2. Thank you for not including images (longer-downloading)

3. I could not access this tool through my computer at home. See attached paper. (Attached paper shows respondent tried to search for http://www.cehd.umn.edu/NCEO/map through Yahoo!)

4. I’m not sure if I have seen all the parts.

5. Don’t have internet to use it on yet.

Testing Process issues

1. What is the process for arranging LEP students’ written compositions to be evaluated as LEP students?

2. For LEP students in math–If student needs a translation that is not Spanish, Hmong or the other one done by the state, will the state arrange a translated copy? Russian? Farsi? German?

3. Can LEP students’ scores be identified as LEP scores even when they take the test without accommodations? It would be useful to see how all my LEP students perform on the test whether they took it with or without accommodations.

4. Issue of unspoken time limit when LEP students are testing with mainstream students

Clarity of the survey

1. Why call the tests BSTs and MCAs when you have Basic Standards and Statewide Accountability Testing as headings on the chart of Exemptions Permitted. Are MCAs the same as Statewide Accountability Testing?

Miscellaneous

1. What to do for students who do poorly–

– set up a discussion page where teachers can ask questions and others can reply with suggestions

Check out discussion page at eslcafe.com

– maybe a statewide teacher chat line