Technical Report 67

Using Cognitive Labs to Evaluate Student Experiences with the Read Aloud Accommodation in Math

Sheryl S. Lazarus • Martha L. Thurlow • Rebekah Rieke • Diane Halpin • Tara Dillon

September 2012

All rights reserved. Any or all portions of this document may be reproduced and distributed without prior permission, provided the source is cited as:

Lazarus, S. S., Thurlow, M. L., Rieke, R., Halpin, D., & Dillon, T. (2012). Using cognitive labs to evaluate student experiences with the read aloud accommodation in math (Technical Report 67). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Acknowledgments

The National Center on Educational Outcomes (NCEO), with the state of South Dakota, conducted the research that informed the development of Technical Report 67. The authors received assistance from many people in planning and implementing the research, as well as in drafting sections of this report. For their support, special thanks go to:


Executive Summary

The read aloud accommodation is frequently used on mathematics assessments, yet Individualized Education Program (IEP) teams often find it difficult to make appropriate decisions about this accommodation. The National Center on Educational Outcomes, with the South Dakota Department of Education, examined how students performed on a math test with the read aloud accommodation compared to how they performed without it. The study also explored students' perceptions of how the accommodation worked. One way to learn more about how students use the read aloud accommodation is to conduct cognitive labs. A cognitive lab is a procedure in which a participant is asked to complete a task and to verbalize the cognitive processes that he or she engages in while completing the task. Cognitive labs were conducted with 24 grade 8 students who had the read aloud accommodation in math designated on their IEPs. This small study found that although the read aloud accommodation did not have a significant impact on student performance, students reported that it reduced their stress. They also perceived that it helped with comprehension and with arriving at the correct answer. Students also indicated that there is sometimes a disconnect between having the read aloud accommodation in math written into their IEP and actually receiving it. All students selected for this study should have received the read aloud at some point during instruction or testing; however, 17% of the students tested reported that they had never previously used the read aloud accommodation. Results from this study can help inform local and state policies and procedures. Appropriate selection and administration procedures can help ensure that students who need a read aloud accommodation in math are able to show what they know and can do.
Introduction

Title I of the Elementary and Secondary Education Act of 2001 and the Individuals with Disabilities Education Improvement Act of 2004 require that all students, including students with disabilities, participate in state accountability systems. Accommodations play an important role in enabling some students with disabilities to participate in assessments used for accountability purposes by removing obstacles "immaterial to what the test is intended to measure" (Thurlow, Lazarus, & Christensen, 2008, p. 17). State policies provide information about which accommodations are appropriate to use on state tests (Thurlow et al., 2008). More than half of U.S. states report that at least 50% of elementary and middle school students with disabilities use accommodations in math (Altman, Thurlow, & Vang, 2010). One accommodation frequently allowed on state mathematics tests is having items and directions read aloud (Thurlow, Lazarus, Thompson, & Morse, 2005). The read aloud accommodation is intended to provide access for students with disabilities who have difficulty decoding written text (Thurlow, Moen, Lekwa, & Scullin, 2010). It may be delivered by human readers, audiotapes, or screen readers, which present the test through auditory rather than visual means (Bolt & Roach, 2009). The National Center on Educational Outcomes (NCEO), in collaboration with the South Dakota Department of Education, explored issues related to the read aloud accommodation in math. The current study used cognitive labs to explore how students responded to the read aloud accommodation, drawing on students' narration of their thought processes as they solved mathematics problems with and without the accommodation. A companion study used focus group methodology to explore read aloud accommodation practices from the test administrators' perspectives (Hodgson, Lazarus, Price, Altman, & Thurlow, 2012).
The read aloud accommodation in mathematics has been studied at both the assessment level and the item level, with mixed findings (Cormier, Altman, Shyyan, & Thurlow, 2010; Johnstone, Altman, Thurlow, & Thompson, 2006; Rogers, Christian, & Thurlow, 2012; Zenisky & Sireci, 2007). For example, Elbaum (2007) compared the performance of students with and without learning disabilities on mathematics assessments administered with the read aloud accommodation and under standard conditions. This study found that elementary students with learning disabilities benefited significantly more from the read aloud accommodation than students without learning disabilities; however, the pattern was reversed for older students. While several previous studies investigated group performance when the read aloud accommodation in math was used, little is known about how individual students who use the accommodation interact with the items. The purpose of this study was to examine the cognitive processes of individual students as they solved mathematics problems with and without the read aloud accommodation. The specific research questions we sought to answer were:

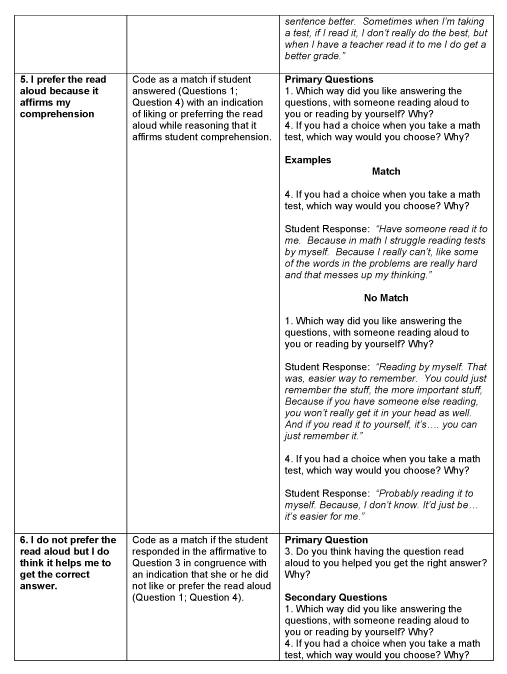

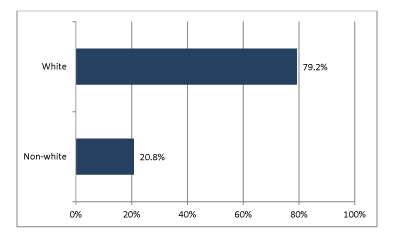

Method

This study used cognitive labs to investigate student responses to the read aloud accommodation. Cognitive labs, also known as Think Alouds, have been used in the fields of psychology and technology for many years. A cognitive lab is a procedure in which a participant is asked to complete a task and to verbalize the cognitive processes that he or she engages in while completing the task. Cognitive labs have been used to examine issues in test design in the development of universally designed large-scale assessments (Johnstone, Altman, & Thurlow, 2006) and to examine the appropriateness of tests designed for sub-populations of students, such as students with disabilities and English language learners (Johnstone, Bottsford-Miller, & Thompson, 2006).

Participants

The South Dakota Department of Education identified districts that might be interested in participating in this study. We contacted these districts, and three agreed to participate. Twenty-four eighth grade students were selected to participate in this study. All had the read aloud accommodation in mathematics on their IEPs. As shown in Figure 1, most students who participated in the study were White (79.2%). Almost 21% were from racial or ethnic minority groups.

Figure 1. Race/Ethnicity of Study Participants

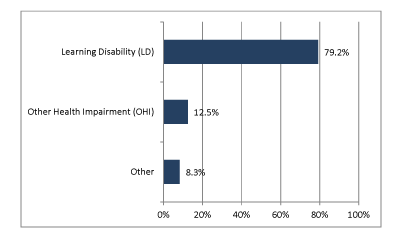

As shown in Figure 2, most participants were students with a specific learning disability (LD) (79.1%). About 8% of participants had both LD and an emotional-behavioral disorder (EBD). More than 12% of the students had other health impairments (OHI).

Figure 2. Primary Disability Category of Study Participants

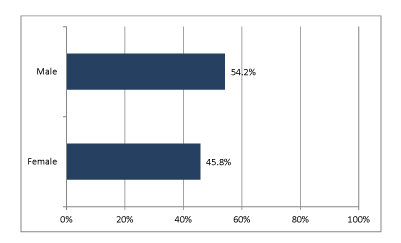

*8.3% of participants had both LD and an emotional-behavioral disorder (EBD).

As shown in Figure 3, about 54% of student participants were male and about 46% were female.

Figure 3. Gender of Study Participants

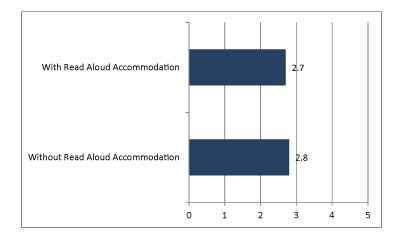

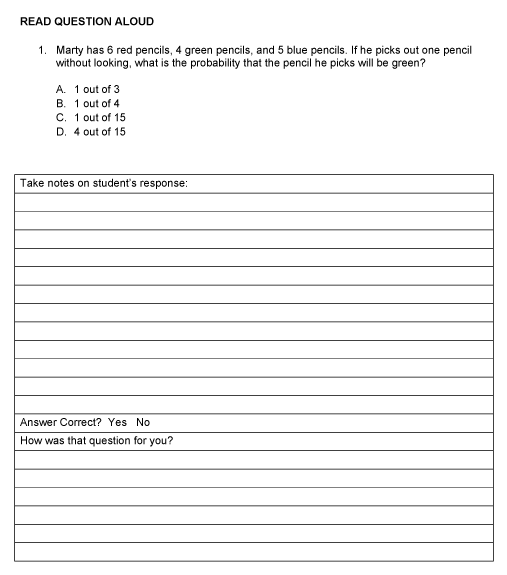

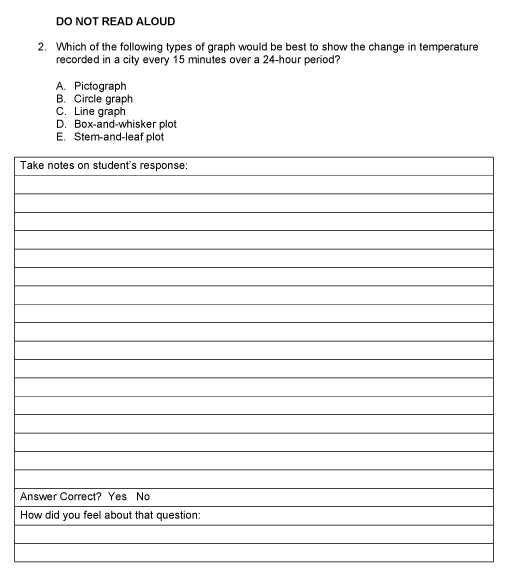

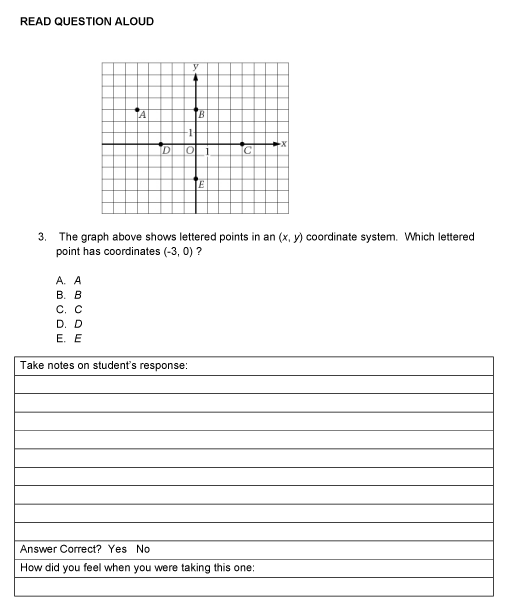

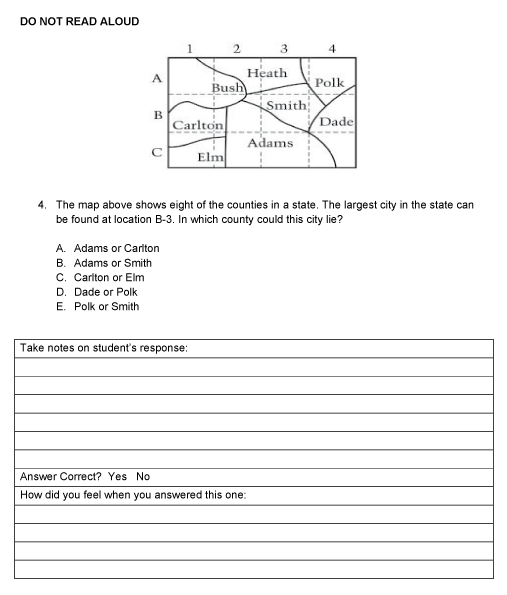

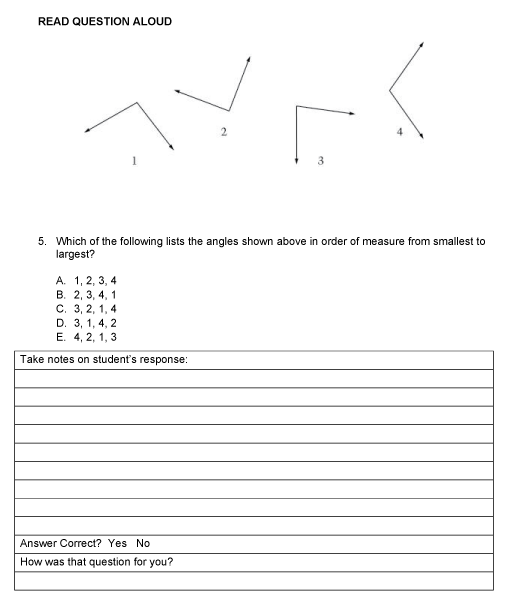

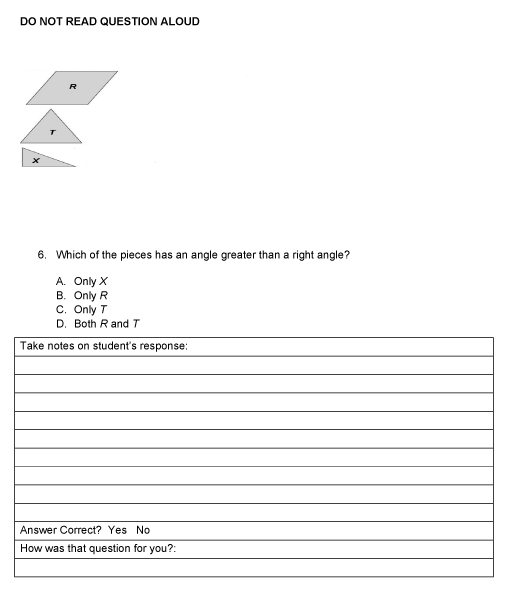

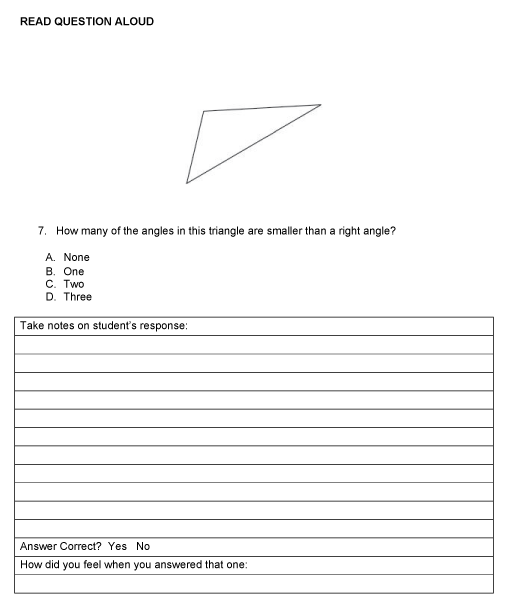

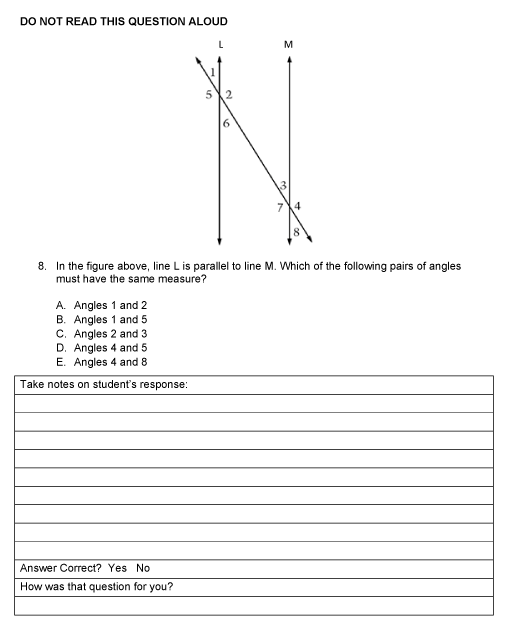

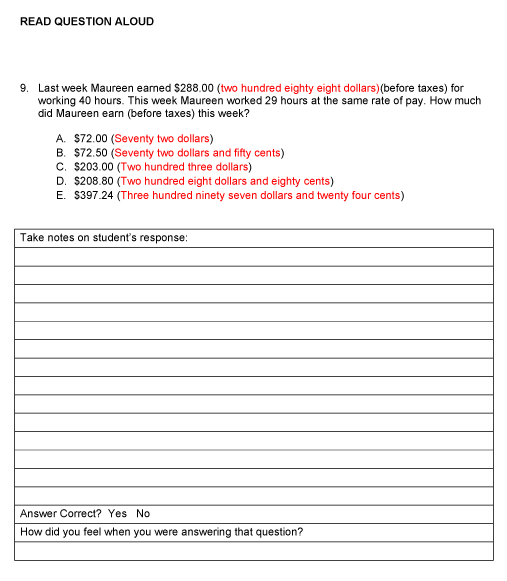

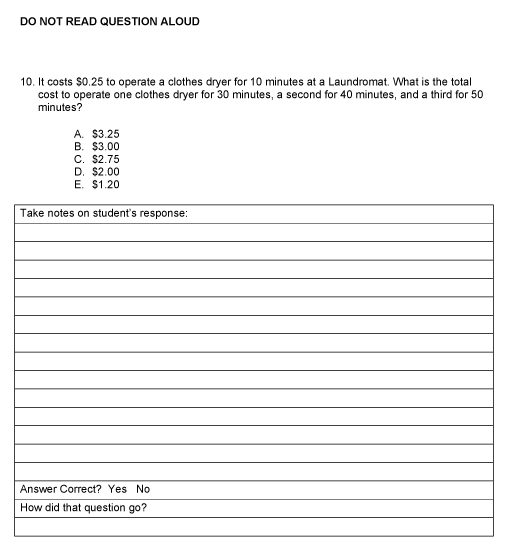

Processes and Procedures

During site visits, the team secured a private room and set up a video camera. Teachers escorted students individually to the room. After confirming that the student's parental permission form had been signed, the assent form was read to the student. The assent form assured students that participation was voluntary and that they could stop at any time and still receive the honorarium ($30.00 gift card). Students were informed that they were being video- and audio-taped and were assured that the recordings would be securely stored and eventually destroyed. The researchers administering the cognitive labs asked each student to repeat the salient parts of the assent form. If the student could articulate the purpose of the study and his or her right to stop the session at any time without penalty, the student signed the form, and the protocol was administered and recorded. All 24 students completed the protocol except one student, who did not complete the last two items (i.e., items 9 and 10). Some students did not select an answer option for every question and instead sometimes moved on to the next question without responding. Data from all students were included in the analysis, even if they did not respond to all items.

The protocol used in this study is in Appendix A; it includes both the script that the researchers used and the math items. Ten multiple choice items were selected for the Read Aloud Protocol. These items were drawn from the National Assessment of Educational Progress (NAEP) items released from 2005 to 2011 (http://nces.ed.gov/nationsreportcard/itmrlsx/search.aspx?subject=mathematics). Because the South Dakota mathematics assessment (i.e., the Dakota State Test of Educational Progress (DSTEP)-Math) consists solely of multiple choice items, only multiple choice items were used in this study.
All 10 items were designated by NAEP as "easy" items with a "low complexity" level, except the last pair (items 9 and 10), which were designated as "easy" items with a "moderate complexity" level. Item pairs were matched on the following five content areas: data analysis and probability, algebra, measurement, geometry, and number properties and operations. Item pairs were also matched on several other criteria, including the percentage correct statistic (the two items in each selected pair were within 8-12 percentage points of each other) and the number of words in the item stem. Finally, items were examined for similarity in the types of questions asked. The items were put into two protocols, Form A and Form B. In Form A, the odd-numbered item in each pair was read aloud to the student and the even-numbered item was read by the student. Conversely, in Form B, the even-numbered item in each pair was read aloud to the student and the odd-numbered item was read by the student. Students were asked to read the non-accommodated questions aloud so that the researchers could observe any reading errors. In addition to the items, the protocol included a script that the researchers followed when administering the read aloud items. Students were asked to verbalize their thoughts under both conditions (i.e., accommodated, non-accommodated). When a student was silent for more than five seconds, the cognitive lab administrators encouraged him or her to say what he or she was thinking, using phrases such as "tell me what you're thinking right now" or "what are you thinking about." After each item was completed, students were asked a question meant to elicit their perceptions and emotional responses. For example, students were asked, "How was that question for you?"
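The Form A/Form B counterbalancing described above fits in a few lines of Python. The sketch below is a hypothetical illustration, not the study's materials: the function names are invented. It also assumes that the matched pairs are consecutive items (1 and 2, 3 and 4, and so on), which is consistent with the report's description of items 9 and 10 as the last pair.

```python
from __future__ import annotations


def read_aloud_items(form: str, n_items: int = 10) -> list[int]:
    """Item numbers read aloud to the student under Form A or Form B."""
    if form == "A":
        parity = 1  # Form A: odd-numbered item of each pair is read aloud
    elif form == "B":
        parity = 0  # Form B: even-numbered item of each pair is read aloud
    else:
        raise ValueError(f"unknown form: {form!r}")
    return [i for i in range(1, n_items + 1) if i % 2 == parity]


def item_pairs(n_items: int = 10) -> list[tuple[int, int]]:
    """Matched item pairs, assumed to be consecutive: (1, 2), (3, 4), ..."""
    return [(i, i + 1) for i in range(1, n_items + 1, 2)]


def condition(form: str, item: int) -> str:
    """Testing condition for a single item under the given form."""
    return "read aloud" if item in read_aloud_items(form) else "student reads"


print(read_aloud_items("A"))  # [1, 3, 5, 7, 9]
print(read_aloud_items("B"))  # [2, 4, 6, 8, 10]
```

Under these assumptions, every matched pair contributes one read aloud item and one student-read item on each form. Each student therefore sees five items in each condition.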
After completing the cognitive lab portion of the protocol and answering all of the mathematics problems, students completed a post-assessment survey on the read aloud accommodation.

Coding and Analysis of Data

Codes were developed through a process analysis of the verbalizations students made while attempting test items. This analysis was completed by reading the cognitive lab transcription for each participating student, watching the videotapes, and coding the major processes of test item completion while reviewing protocols. We began the coding process by noting which behaviors, cognitive processes, and emotional responses provided the most evidence for holistically capturing the experiences of students. First, student test-taking experiences were categorized as subcomponents of the test-taking process and drafted onto a coding form for use in analysis. The following subcomponents were identified as important: correctness of student responses, number of reading errors, time per question, and emotional responses to each item presented. For items that were not accommodated (i.e., the students read the item aloud themselves), the number and types of reading errors were recorded. Two rules governed the counting of reading errors. The first rule was that an error was counted only on its first occurrence within a given test item. For example, if the student pronounced a word incorrectly and used the incorrect pronunciation throughout the item, that was counted as one error. The second rule was that if the student self-corrected while reading, the error was not counted.
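The two counting rules work as a short filter over coded observations. The sketch below is a hypothetical illustration: the `ReadingError` record and the `count_errors` function are invented for this example. It also assumes that "a particular error" means the same word with the same error type within one item, which the report does not define precisely.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ReadingError:
    """One coded reading error on a non-accommodated item (hypothetical record)."""
    item: int             # test item number
    word: str             # word or phrase on which the error occurred
    kind: str             # "fluency", "phonetic", or "attention to detail"
    self_corrected: bool  # True if the student corrected the error while reading


def count_errors(observations: list[ReadingError]) -> dict[int, int]:
    """Count reading errors per item under the two counting rules."""
    seen: set[tuple[int, str, str]] = set()
    counts: dict[int, int] = {}
    for obs in observations:
        # Rule 2: an error the student self-corrected is not counted.
        if obs.self_corrected:
            continue
        key = (obs.item, obs.word, obs.kind)
        # Rule 1: only the first occurrence of a given error within an item counts.
        if key in seen:
            continue
        seen.add(key)
        counts[obs.item] = counts.get(obs.item, 0) + 1
    return counts


# Hypothetical observations, not study data.
example = [
    ReadingError(2, "perimeter", "phonetic", False),
    ReadingError(2, "perimeter", "phonetic", False),  # repeated: counted once
    ReadingError(2, "rectangle", "fluency", True),    # self-corrected: ignored
    ReadingError(4, "the", "attention to detail", False),
]
print(count_errors(example))  # {2: 1, 4: 1}
```

Items with no counted errors simply do not appear in the result. A student's total is the sum of the per-item counts.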
Reading errors were reported as the number of unique errors (i.e., a repeated error was counted only once). Each error was classified by type: a fluency error if a student repeated a word or stumbled on pronunciation; a phonetic error if a student used a phonetic strategy to read an unfamiliar word but did not sound it out correctly; and an attention to detail error if the student hurried through the sentence and read what he or she predicted the content would be. Time per question was calculated from the time the reading of the item was completed to the time the student finished the question by selecting an answer or moving on to the next item. Immediately following each item, the examiner asked each student a question to elicit his or her thoughts about the item. Most students responded in terms of how easy the item was for them to answer. Students' perceptions of the items were coded using a 3-point scale (1=easy, 2=medium, and 3=hard). Second, question-specific codes were developed for the responses to the post-assessment survey questions asked at the end of the test-taking experience (see the Results section for details). Several iterations of coding sheets were drafted with team member input before a final version was chosen, and the coding sheets were evaluated multiple times to ensure that they met the needs of this project. Team members were then trained on how to use the coding sheets, and all cognitive lab transcriptions were coded by multiple team members to ensure inter-rater reliability. At least two team members coded the data for each student. Discrepancies were discussed, and final codes were determined by a separate two-member team. Coded data were analyzed both quantitatively and qualitatively. Overall responses were coded and descriptive statistics were calculated.
For example, items were analyzed for correct/incorrect responses, total time spent per item, and reading-related issues such as the number of reading errors per item. Qualitative information was analyzed using the methodology described in Bogdan and Biklen (1992). All direct quotes from students were transcribed and coded into generalized themes (Van Someren, Barnard, & Sandberg, 1994).

Results

Student Performance and the Read Aloud Accommodation

In both protocols (i.e., Form A and Form B), each student was presented with a total of ten items: five questions that were read aloud to the student and five that were not. Figure 4 compares the mean scores of the 24 participants by testing condition (i.e., with read aloud accommodation, without read aloud accommodation). The mean number of items correct out of the ten questions was 5.5. The average score for items that were read aloud to the student (2.7) was slightly lower than the average score for items without the accommodation (2.8).

Figure 4. Mean Number of Items Correct With and Without the Read Aloud Accommodation

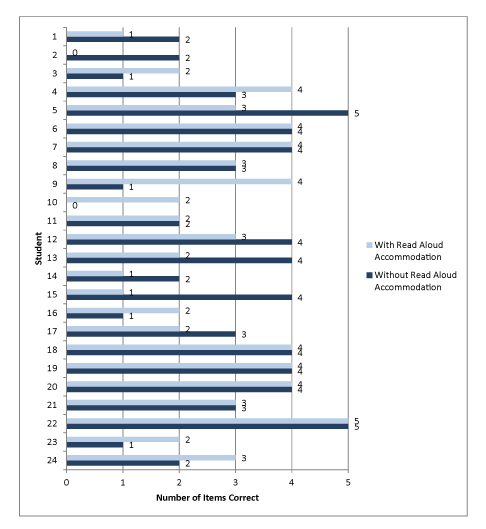

A Wilcoxon signed-rank test was performed to determine whether there was a significant difference between students' performance on items that were read aloud and items that were not. An alpha level of .05 was used. Results indicated that the read aloud accommodation did not have a significant effect on student performance (Z = -.525, p = .599). Figure 5 shows individual student responses by test condition. Overall, the total number of items correct across the two conditions ranged from 2 to 10. Seven students performed better on items that were read aloud, answering one or two more items correctly with the accommodation. Eight students performed better on items when they did not receive the accommodation. Performance was the same under both conditions for nine students.

Figure 5. Number of Items Answered Correctly by Individual Students* With and Without the Read Aloud Accommodation

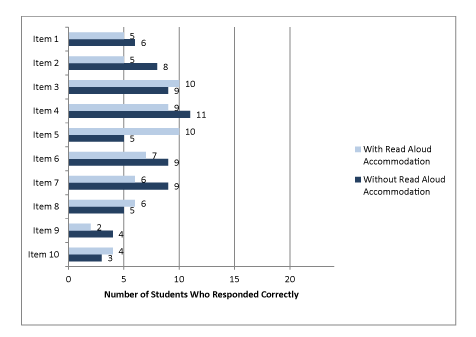

*The student data are reported in random order in this figure (i.e., not in the order in which the cognitive labs were administered).

Figure 6 presents the number of students who answered each item correctly, by condition. Overall, students performed better on four items with the read aloud accommodation (i.e., items 3, 5, 8, and 10); refer to Appendix A to see the actual items. Students performed better on six items when they did not receive the read aloud accommodation. Items 3 and 4 were the easiest items for the students, and most students selected the correct answers regardless of whether those two items were accommodated. Items 9 and 10 were the most difficult.

Figure 6. Number of Students Who Answered Each Item Correctly With and Without the Read Aloud Accommodation
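The Wilcoxon signed-rank test reported earlier in this section is a paired, nonparametric comparison of each student's two condition scores (items correct with and without the read aloud, each out of 5). The sketch below is a generic textbook version written for illustration, not the study's analysis code. It uses the large-sample normal approximation with a tie correction and no continuity correction. Statistical packages differ in such details, so this sketch should not be expected to reproduce the reported Z = -.525 exactly.

```python
from __future__ import annotations

import math


def wilcoxon_signed_rank(x: list[float], y: list[float]) -> tuple[float, float]:
    """Two-sided Wilcoxon signed-rank test using the normal approximation.

    Zero differences are dropped, tied absolute differences receive average
    ranks, and the variance is corrected for ties. No continuity correction.
    Returns (z, p), where z is based on the smaller of the two rank sums.
    """
    if len(x) != len(y):
        raise ValueError("x and y must be paired (same length)")
    d = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank |d| from smallest to largest, giving tied values their average rank.
    order = sorted(range(n), key=lambda k: abs(d[k]))
    ranks = [0.0] * n
    tie_term = 0.0  # sum of (t^3 - t) over groups of t tied values
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + j + 2) / 2  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1

    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    w_minus = sum(r for r, di in zip(ranks, d) if di < 0)
    t_stat = min(w_plus, w_minus)

    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (t_stat - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p = 2 * (1 - Phi(|z|))
    return z, p
```

In this study, the nine students whose scores were identical under both conditions would be dropped as zero differences, leaving 15 paired differences. Because condition scores range only from 0 to 5, many absolute differences tie, which is why the tie correction matters here.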

Students were categorized based on how many items they answered correctly under each condition (i.e., read aloud accommodation, no read aloud accommodation). A student who answered 3-5 items correctly under a given condition was considered a "high" scoring student for that condition. A student who answered 0-2 items correctly was considered a "low" scoring student for that condition. As shown in Table 1, 8 of the 24 students scored at the low level both with the read aloud accommodation and when the items were not accommodated. Eleven students scored at the high level both with and without the accommodation. Three students scored low when the items were read aloud and high when they did not receive the accommodation. Two students scored high when the items were read aloud but low when they did not receive the accommodation.

Table 1. Number of Students Who Performed at Selected Levels With and Without the Read Aloud Accommodation

Note: High = score of 3-5 items correct; Low = score of 0-2 items correct.

Reading Errors

As noted in the Processes and Procedures section, reading errors were coded as one of three types. A fluency error was coded when a student stumbled on the correct pronunciation or repeated a word or part of a word more than once. A phonetic error was coded when a student used a phonetic strategy to read an unfamiliar word, but the strategy was incorrect. An attention to detail error was coded when a student fluently read and correctly pronounced words that were not in the sentence but would fit the sentence context. Using the performance groups described above, Table 2 shows the average total number of reading errors for each of the four groups. Students who scored low both with and without the read aloud accommodation had an average of 6.5 reading errors. Students who scored high when the items were read aloud but low when they did not receive the accommodation had an average of 13.5 reading errors on the items they read themselves, the most of any group. Students who scored low when they received the read aloud accommodation but high without it made an average of 5.0 reading errors, the fewest of any group when reading the items themselves. Students who scored high both with and without the read aloud accommodation had an average of 11.0 reading errors.

Table 2. Total Number of Reading Errors by Score Group

Table 3 shows the average amount of time students took to answer each item, by score level group. Students who scored at the low level both with and without the read aloud accommodation took an average of 0.9 minutes per item (0.8 minutes for accommodated items and 0.9 minutes for non-accommodated items). Students who scored high with the read aloud accommodation and low without it took slightly more time per item (1.1 minutes) than the other groups.

Table 3. Average Time per Item by Condition and Score Level

| Student Groups | Mean Total Score+ | Mean Math Self-Rating* |
|---|---|---|
| 1. Low/Low: Low with the Read Aloud Accommodation/Low without the Read Aloud Accommodation | 2.9 | 5.1 |
| 2. High/Low: High with the Read Aloud Accommodation/Low without the Read Aloud Accommodation | 5.0 | 5.5 |
| 3. Low/High: Low with the Read Aloud Accommodation/High without the Read Aloud Accommodation | 5.3 | 5.0 |
| 4. High/High: High with the Read Aloud Accommodation/High without the Read Aloud Accommodation | 7.6 | 6.9 |

*Student self-rating of how good they are at math on a scale of 1 to 10, with 10 being "great" and 1 being "really bad."

+The total score includes both accommodated and non-accommodated items.
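The score level groups used in the tables above come from each student's two condition scores (0-5 items correct per condition). The sketch below shows that grouping under the report's cutoffs (3-5 correct is High, 0-2 is Low). The function names are hypothetical, and any inputs shown are illustrative rather than study data.

```python
from __future__ import annotations

from collections import Counter


def score_level(n_correct: int) -> str:
    """Classify a 0-5 condition score: 3-5 correct is High, 0-2 is Low."""
    if not 0 <= n_correct <= 5:
        raise ValueError("a condition score must be between 0 and 5")
    return "High" if n_correct >= 3 else "Low"


def score_groups(scores: list[tuple[int, int]]) -> Counter[str]:
    """Tally students into Low/Low, High/Low, Low/High, and High/High groups.

    Each element of `scores` is (correct with read aloud, correct without
    read aloud); the label lists the read aloud condition first.
    """
    return Counter(f"{score_level(w)}/{score_level(wo)}" for w, wo in scores)


# Hypothetical scores for five students, not study data.
print(score_groups([(2, 1), (4, 2), (1, 3), (5, 5), (3, 4)]))
```

Listing the read aloud condition first mirrors the group labels in the tables, so "High/Low" means high with the accommodation and low without it.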

Perceptions of Individual Items. Immediately following each item, the researcher administering the cognitive lab asked each student a question to elicit his or her feelings toward the item. As described in the Processes and Procedures section, students' perceptions of the items were coded using a 3-point scale (1=easy, 2=medium, and 3=hard). Table 5 presents the mean perceived difficulty for each student group for items completed with and without the read aloud accommodation. Across the two conditions (i.e., accommodated, not accommodated), the mean ratings for all groups remained near the middle of the scale, with most students indicating that most items were of medium difficulty. For example, students who scored low both with and without the read aloud accommodation rated the items read aloud to them, on average, at a difficulty level of 2.0. For three of the four score level groups, students perceived items as more difficult when they did not receive the read aloud accommodation.
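The 3-point coding of item perceptions and the group means in Table 5 are shown in the sketch below. It rests on several assumptions. Coders are assumed to have recorded each response as "easy", "medium", or "hard". A group's mean is assumed to pool all of its item-level ratings; the report does not state whether means were computed over items or over student averages. The function name is hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean

# 3-point coding used for post-item responses: 1=easy, 2=medium, 3=hard.
DIFFICULTY_CODES = {"easy": 1, "medium": 2, "hard": 3}


def mean_perceived_difficulty(
    ratings: list[tuple[str, str, str]],
) -> dict[tuple[str, str], float]:
    """Mean coded difficulty for each (score group, condition) cell.

    Each rating is (group, condition, label), e.g.
    ("Low/Low", "read aloud", "medium"). All item-level ratings in a
    cell are pooled (an assumption); unknown labels raise KeyError.
    """
    cells: dict[tuple[str, str], list[int]] = defaultdict(list)
    for group, cond, label in ratings:
        cells[(group, cond)].append(DIFFICULTY_CODES[label])
    return {cell: mean(codes) for cell, codes in cells.items()}
```

A lower mean indicates that a group perceived the items in that condition as easier. The Low/High group, for example, reported a lower mean without the read aloud.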

Post-Assessment Survey. Immediately after completing the cognitive lab, students answered the post-assessment survey questions so that we could better understand their feelings about the read aloud accommodation. The survey questions appear at the top of Figures 7-13, which display the percentage of students who gave each response.

Table 5. Student Perceptions of Item Difficulty with and without the Read Aloud Accommodation by Score Level Group

| Student Groups | Mean Perceived Difficulty*: With Read Aloud Accommodation | Mean Perceived Difficulty*: Without Read Aloud Accommodation |
|---|---|---|
| 1. Low/Low: Low with Read Aloud Accommodation/Low without Read Aloud Accommodation | 2.0 | 2.3 |
| 2. High/Low: High with Read Aloud Accommodation/Low without Read Aloud Accommodation | 2.1 | 2.4 |
| 3. Low/High: Low with Read Aloud Accommodation/High without Read Aloud Accommodation | 2.1 | 1.7 |
| 4. High/High: High with Read Aloud Accommodation/High without Read Aloud Accommodation | 1.8 | 2.2 |

*1=Easy, 2=Medium, 3=Hard

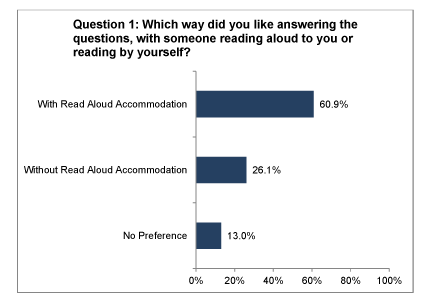

According to Figure 7, the majority of students who participated in this study preferred the read aloud accommodation to reading the items themselves. Many students who preferred the read aloud accommodation gave one of two reasons: they thought it improved their comprehension, or they felt that answering the items was generally easier with the accommodation. Student responses included:

"You're reading it to me. Because that seemed a lot easier."

"It's better to listen to somebody when they read it than read it to yourself. It gives you time to think."

"Because, sometimes when people read out loud, they kind of give you, they really give you kind of an example and sometimes I can get it."

Figure 7. Student Preference for Read Aloud Versus Not Read Aloud

Students who preferred reading the items by themselves indicated that they felt reading alone improved their comprehension. Some preferred to set their own pace, which they thought the read aloud accommodation did not allow. Still others said they needed to re-read the question after hearing it read aloud and therefore preferred to read the items themselves. Some typical responses were:

"Reading by myself. That was an easier way to remember. You could just remember the stuff, the more important stuff, because if you have someone else reading, you won't really get it in your head as well. And if you read it to yourself, it's…. you can just remember it."

"Reading by myself. Well, because when I'm reading by myself, normally when I'm just alone, I can get done a lot faster, and I don't have to wait and do them in class with the teacher. I can just sit by myself and do them."

A small percentage of students expressed no preference when asked whether they preferred the read aloud to reading alone:

"A little of both. Because, if I'm reading it out loud I can kind of remember it more than if someone read it to me, but it helps equally."

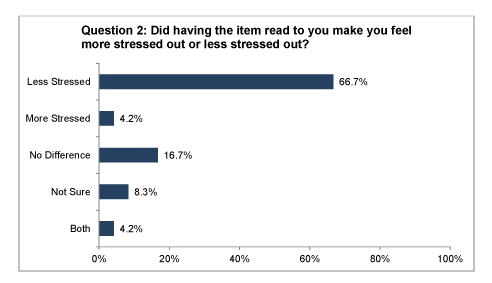

As Figure 8 illustrates, students were asked whether the read aloud accommodation increased or decreased their stress, and the majority of those who participated in the cognitive labs reported feeling less stress with the accommodation. Typical responses included:

"Less stressed. That makes it make more sense."

"Less . . . you get to thinking while they're reading it to you."

"Less. So I wouldn't have to struggle with some words."

Two students stated that they felt more stress when items were read aloud. One reason they gave was that they did not understand the question. The other was that having items read aloud added pressure by imposing a time limit on each item, and they preferred to work at their own pace. For example, one student said, "I just like to read things myself so I can understand them."

Figure 8. Student Perception of Stress

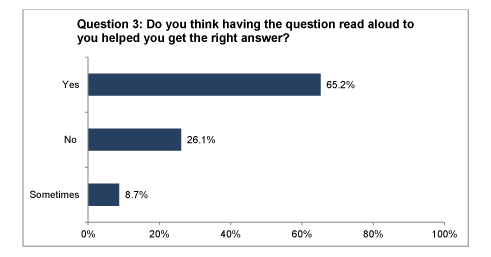

As shown in Figure 9, almost two-thirds of the students thought that the read aloud accommodation helped them select the correct answer. In general, most students perceived that the read aloud improved their comprehension. Some students thought that the read aloud accommodation provided hints to the correct answers. Others believed that the pacing of the read aloud accommodation helped them arrive at the correct responses. Student responses included:

"Because whenever we read it, it gave me a hint in the paragraphs about, what the question could be about and how to add and subtract them all."

"I was able to understand it better."

"Because in some ways it helped me understand it more."

"Sometimes I mess up if I read too fast, and I'll get it wrong. But sometimes if a teacher would read it or something, and they go slow, and I'll figure it out."

Figure 9. Student Perception of the Helpfulness of the Read Aloud Accommodation

Of the students tested, 26% did not think the read aloud accommodation helped them get the correct answer. These students generally reasoned that they still had to solve the problems themselves, so the read aloud accommodation did not help:

"I don't, not really, because they're not really telling me what do, I still have to figure it out."

"No, because it's just reading, they're not really helping you, just reading."

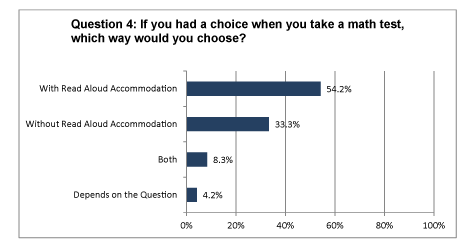

The students were asked whether they preferred to have math tests read aloud. As shown in Figure 10, slightly more than half of the students preferred to have math tests read aloud. As with previous questions, most students who preferred to have math tests read aloud believed that it improved comprehension or helped them get the right answer. Some typical responses were:

"Read it out loud to me. Because I get more of a picture in my head than I do when I read it to myself."

"Reading it out loud. You have time to think."

"A little bit of both. It's a lot easier to read it by yourself than have someone read it to you but I like it better when somebody reads to me."

"Have it read out loud. So you can figure out the question and get it, memorize it."

Figure 10. Student Preference for Read Aloud Accommodation in Math

The students who preferred not to have tests read aloud gave three kinds of reasons: reading the tests themselves improved their comprehension, they preferred to work at their own pace, or they felt socially uncomfortable with the read aloud. Typical reasons included:

"I'll read myself."

"Not read aloud. Because I'm going to be more uncomfortable than just reading it by myself."

"Read it by myself. Because if she says it too fast or something . . . and sometimes they don't re-read it."

"Probably not the read aloud. Because then I can go back through and read it a few times."

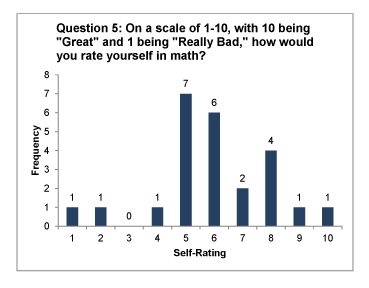

As previously noted, students were asked to rate how good they were at math on a scale of 1 to 10, with 1 being "really bad" and 10 being "great" (see Figure 11). The mean student self-rating in math was 6.0. Examples of student responses are below:

"8 – Because I struggle with it."

"5 – Because I'm just pretty average."

"6 – Because it's kind of hard, some stuff's kind of hard and then some of it's really easy."

"6 – Because I didn't do too well on some tests, but I try to retake them."

"5 –Because I'm not that great at math most of the time."

Figure 11. Student Self-rating in Math

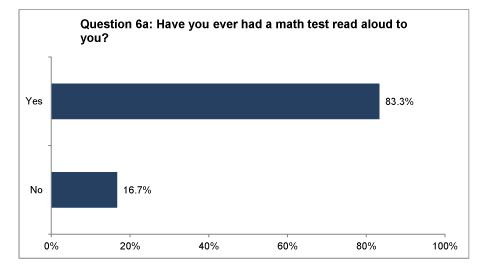

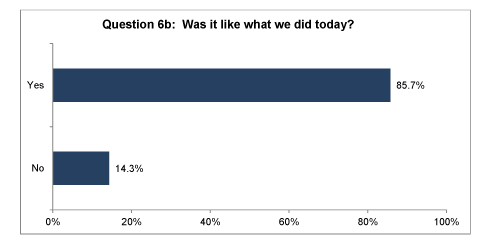

Teachers were asked to identify for this study only students who had the read aloud accommodation in math already designated on their IEPs, because the purpose was to evaluate the efficacy and appropriateness of the accommodation for these students. As a result, participants were students for whom the read aloud had already been deemed an appropriate accommodation. To verify that students had previous experience with the read aloud accommodation, we asked students whether they had ever had a math test read to them. As shown in Figure 12, most students (83.3%) reported that they had previously had a math test read aloud. Surprisingly, almost 17% of students reported never having had a math test read aloud. According to Figure 13, about 86% of the students said that their experiences during the cognitive lab were similar to their previous experiences with the read aloud accommodation. Student responses included:

"Yes, they read them to me on Dakota STEP Test but not in the classroom, I don't get read to."

"Yeah. Kind of [like what we did today]. Because she was reading the questions to me and… she was explaining them."

"I don't think so, no. Oh yeah. That math was read to me [on the Dakota STEP]. I don't know, I guess it's the same because you both couldn't like tell me the answer, you could just read it. So I'd say it's the same."

"I don't know. I think so. Yeah, they just read the whole math test."

"No. I have language and reading tests read to me (but not math)."

"No, I haven't, not since I started middle school."

Figure 12. Students' Prior Experience with the Read Aloud Accommodation

Figure 13. Similarity of Prior Experience with Testing Protocol

Student Preferences. As we looked at the student responses to the post-assessment survey, we began to see patterns emerge. In some cases, students outright contradicted themselves. For example, in response to Question 1 (Which way did you like answering the questions, with someone reading aloud to you or reading by yourself?), almost 61% chose the read aloud. Later, in response to Question 4 (If you had a choice when you take a math test, which way would you choose?), only 54% of the students indicated that they preferred to have tests read aloud. The overall picture is multifaceted: six students (25%) gave contradictory answers to Questions 1 and 4, which are similar in nature. For example, three students said that they liked the read aloud in response to Question 1, but then said they would choose not to have the questions read aloud in response to Question 4. Another student said he did not prefer the read aloud in response to Question 4, yet said he liked it in response to Question 1. Another student indicated "no preference" in response to Question 4, but liked the read aloud when answering Question 1. Contradictions also appeared within responses to a single question: in answering Question 3 (Do you think having the question read aloud to you helped you get the right answer?), a few students expressed preferences both for the read aloud accommodation and for taking the test without it. For example:

Researcher: Do you think having the question read aloud to you helped you get the right answer?

Student: Yeah, kind of. When I read it to myself, I got it a little bit more, but, yeah, it helped a little.

This student expressed two preferences simultaneously. He thought that the read aloud helped him select the right answer, but also indicated that he understood the item a little better when he read it to himself. Earlier in the conversation, when asked post-assessment survey Question 1 about which way he liked better, this same student said, "Reading by myself. That was, an easier way to remember." We see further signs of complexity in this student's emotional response later in the same conversation:

Researcher: Did having the item read to you make you feel more or less stressed out would you say?

Student: Less stressed out.

Researcher: And why is that?

Student: I don't know, it's just… I'm not like, one of those people that are really shy….

Researcher: Mm hmm.

Student: I don't know, it's just… I can listen to them say it, and it'll just be like another thing for me to remember, so I have myself reading it, and them reading it.

Researcher: So it was….

Student: So I'm….

Researcher: I want to make sure I understood, so it's less stressful to have it read?

Student: Yeah.

Researcher: OK. . . And just repeat one more time why.

Student: Just because, like, you know you're right. You know you can read it over again from someone else's perspective and know you read it the right way. And you can understand it.

We ascertained that this student believed that having a teacher read aloud to him helped him (reducing his stress and helping him get the correct answer); however, he performed neither better nor worse with the read aloud accommodation, giving four correct answers with the accommodation and four correct answers without it.

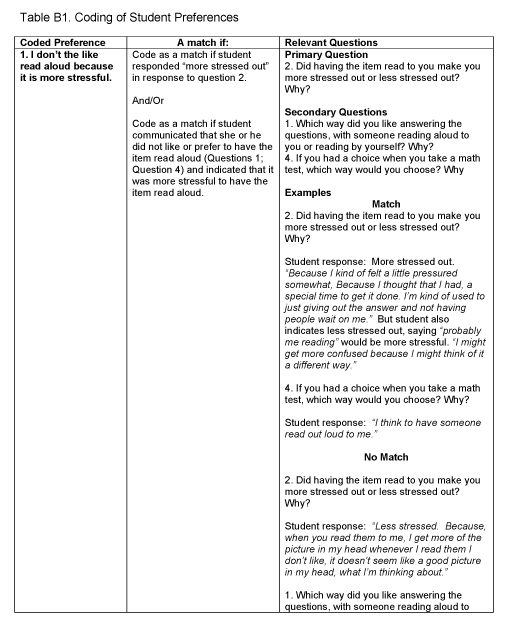

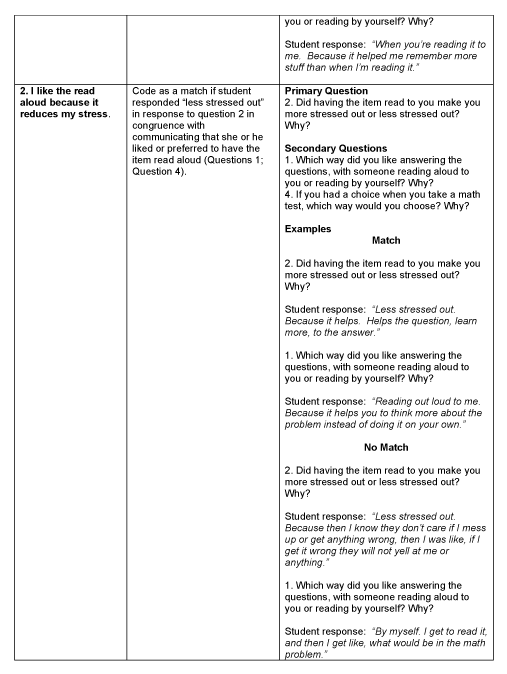

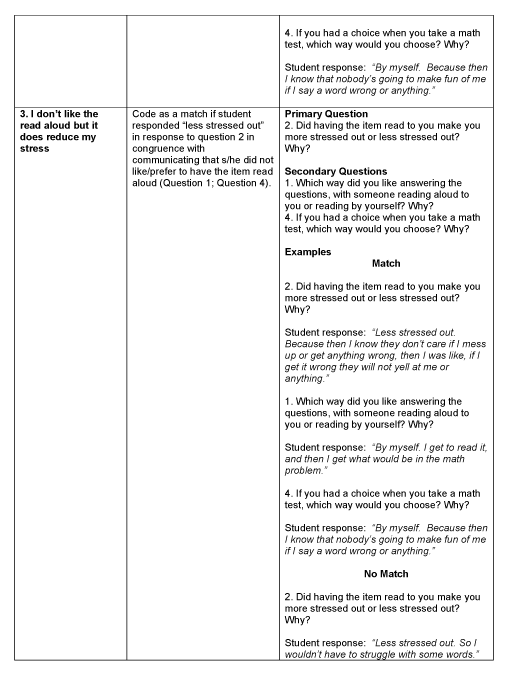

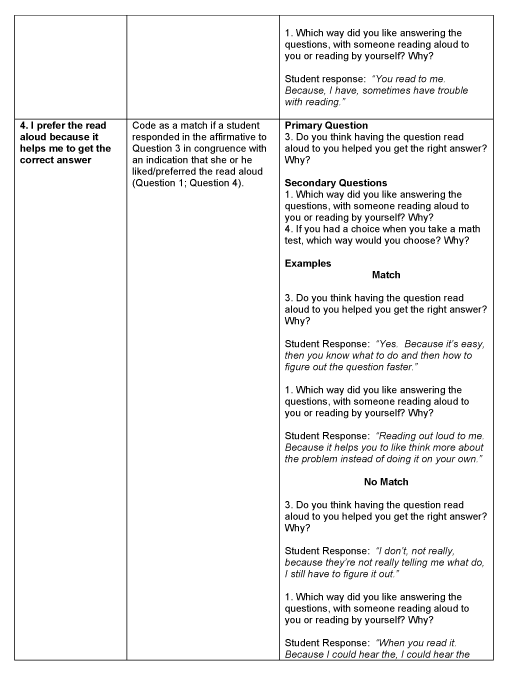

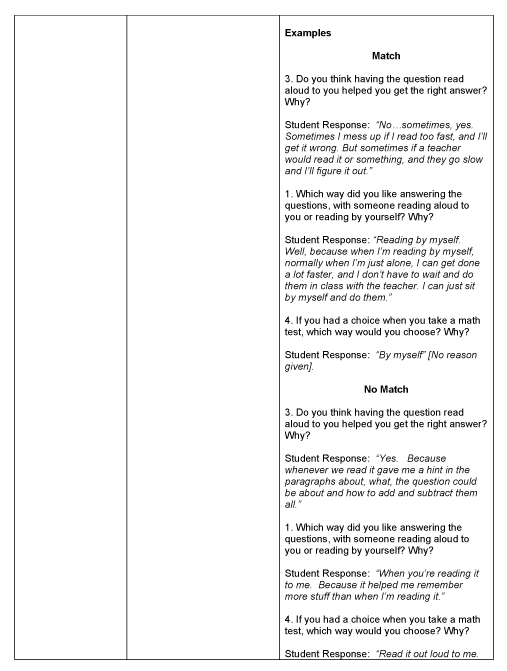

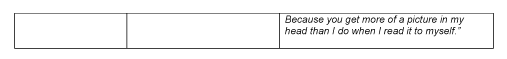

There were many other examples of seeming contradictions like this one that students explained when probed further. To summarize the complexity of the qualitative responses on student preferences, the researchers devised a coding system in which each student was coded as matching or not matching each of six kinds of preferences. These preferences captured students' holistic experiences and were a valuable tool for beginning to assess the relationships between emotional responses (i.e., stress) and accommodation preferences. The student preferences that emerged in this analysis were:

1. I don't like the read aloud because it is more stressful.

2. I like the read aloud because it reduces my stress.

3. I don't like the read aloud but it does reduce my stress.

4. I prefer the read aloud because it helps me to get the correct answer.

5. I prefer the read aloud because it affirms my comprehension.

6. I do not prefer the read aloud but I do think it helps me to get the correct answer.

See Appendix B for each coded preference, the criteria for whether a student matched (yes) or did not match (no) each preference, and examples of the kinds of student statements that would elicit a match. It was possible for a student to match preferences that seemed contradictory. For example, some students preferred the read aloud accommodation because they thought it helped with comprehension (Preference 5) and liked it because it reduced their stress (Preference 2), but also indicated that the read aloud was stressful because they felt rushed and thought the teacher was "waiting on them" (Preference 1).

As shown in Table 6, student perceptions of the helpfulness of the read aloud accommodation varied. For example, across all students who participated in this study, 58% indicated that they liked the read aloud accommodation because it reduced their stress (Preference 2). Of the lowest performing students (low scoring both with and without the read aloud accommodation), 63% (5 students) expressed this opinion.

Table 6. Coded Student Perceptions of the Read Aloud Accommodation by Score Group

| Student Preference Matches | All Students | 1. Low/Low: Low with Read Aloud Accommodation / Low without Read Aloud Accommodation | 2. High/Low: High with Read Aloud Accommodation / Low without Read Aloud Accommodation | 3. Low/High: Low with Read Aloud Accommodation / High without Read Aloud Accommodation | 4. High/High: High with Read Aloud Accommodation / High without Read Aloud Accommodation |
|---|---|---|---|---|---|
| 1. I don't like the read aloud because it is more stressful | 16.7% | 0.0% | 0.0% | 33.3% | 27.3% |
| 2. I like the read aloud because it reduces my stress | 58.3% | 62.5% | 50.0% | 66.7% | 54.5% |
| 3. I don't like the read aloud but it does reduce my stress | 12.5% | 0.0% | 0.0% | 0.0% | 27.3% |
| 4. I prefer the read aloud because it helps me to get the correct answer | 50.0% | 62.5% | 50.0% | 33.3% | 45.5% |
| 5. I prefer the read aloud because it affirms my comprehension | 70.8% | 87.5% | 50.0% | 66.7% | 63.6% |
| 6. I do not prefer the read aloud but I do think it helps me to get the correct answer | 21.7% | 0.0% | 0.0% | 33.3% | 36.4% |

Preference 5 provides another example. Some students indicated a preference for the read aloud because they thought it affirmed their overall comprehension of the items. Of the lowest performing students (low scoring both with and without the read aloud accommodation), 88% expressed this opinion. At least 50% of students in each of the other score groups also believed that the read aloud accommodation improved their comprehension.
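Because each student could match any number of the six coded preferences, the percentages in Table 6 are computed column by column and do not sum to 100%. The Python sketch below shows one way such a tabulation could be done. It is illustrative only: the record layout, the `match_percentages` function, and the four sample students are hypothetical and are not the study data or the researchers' actual procedure.

```python
from collections import defaultdict

# Hypothetical records for illustration only (NOT the study data):
# (student_id, score_group, set of preference codes 1-6 the student matched).
students = [
    ("S01", "Low/Low", {2, 4, 5}),
    ("S02", "Low/Low", {5}),
    ("S03", "High/High", {1, 2, 5}),
    ("S04", "High/High", {3, 6}),
]

PREFERENCES = range(1, 7)  # the six coded preferences
ALL = "All Students"


def match_percentages(records):
    """Percent of students in each column who matched each preference.

    A student counts toward their own score group and toward the
    "All Students" column. Because one student may match several
    preferences, the percentages in a column need not sum to 100.
    """
    group_sizes = defaultdict(int)
    match_counts = defaultdict(lambda: defaultdict(int))
    for _student_id, group, matches in records:
        for column in (group, ALL):
            group_sizes[column] += 1
            for pref in matches:
                match_counts[column][pref] += 1
    # Divide each match count by the size of its column (score group).
    return {
        column: {
            pref: round(100 * match_counts[column][pref] / size, 1)
            for pref in PREFERENCES
        }
        for column, size in group_sizes.items()
    }


table = match_percentages(students)
print(table[ALL])  # {1: 25.0, 2: 50.0, 3: 25.0, 4: 25.0, 5: 75.0, 6: 25.0}
```

Each column is divided by the number of students in that score group, so a single student can contribute to several rows at once. This is how one student can match seemingly contradictory preferences, such as Preferences 1 and 2.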


Discussion

This study explored how students responded to the read aloud accommodation with mathematics items and examined the impact of the read aloud accommodation on student performance. Through the use of cognitive labs, we were able to gather "in-the-moment" data on individual student reactions and responses to the read aloud accommodation. We also collected data about students' perceptions of the read aloud accommodation in mathematics.

The results indicated that, for the group of students who participated in this study, there was no significant difference in student performance between items with the read aloud accommodation and items without it. However, one subgroup of students received relatively high scores regardless of whether they received the read aloud accommodation, and another subgroup appeared not to know the content and scored poorly regardless of whether they received the accommodation.

Although the read aloud accommodation did not significantly impact student performance, it did appear to positively influence students' experiences during test taking. The majority of students reported that the read aloud accommodation reduced the stress they felt while testing and helped them to get the right answer. Analysis of student responses also revealed that the majority of students felt that the read aloud accommodation improved their comprehension of the items. Furthermore, more than half of all student participants indicated that, given the choice, they would choose the read aloud accommodation. However, some students did not like the read aloud accommodation and thought it added a sense of pressure, expectation, frustration, or judgment (e.g., having people waiting on them, having to wait for the administration of the read aloud, not being able to self-pace).

Although the current study addressed an existing gap in the read aloud accommodation literature by investigating the accommodation from the student's point of view, it is important to recognize its limitations. It was a very small study with only 24 participating students, so its results cannot be generalized. The study required students to read the math items aloud to the researchers and to verbalize their thought processes while attempting to solve the items. In addition, every other item was read aloud to the student. These procedures do not simulate a typical test-taking experience with the read aloud accommodation and may have affected the study results. The post-assessment questions also relied on self-report responses that may have been affected by social desirability bias. Additional research investigating the read aloud accommodation from the student perspective is needed to confirm the findings of this study.

The findings of this study indicate a need for improved practices. Recommendations include:

- Provide training to IEP team members on how to select, implement, and evaluate the use of the read aloud accommodation on math assessments. The study results suggest that some of the students who received the read aloud accommodation in math may not have needed the accommodation. For example, some of these students did very well on the math items regardless of whether they received the read aloud accommodation.

- Develop and provide training on guidelines about how to administer accommodations that involve a third party. Several students said that they liked the read aloud accommodation because it gave them hints to the correct answer. The read aloud accommodation often introduces human variability into the testing situation. Guidelines can help ensure that the accommodation is delivered appropriately.

- Develop policies and procedures that help ensure that students receive the accommodations that are on their IEPs. All participants in this study had the read aloud accommodation designated on their IEP. It is a concern that only 83.3% of students reported previously receiving the read aloud accommodation in math. Sometimes, accommodation decisions may fall apart on test day due to logistical issues, space considerations, or human factors. State education agencies (SEAs) and local education agencies (LEAs) need to develop policies and procedures that will help ensure that students receive the accommodations that are on their IEPs. Both the Elementary and Secondary Education Act and the Individuals with Disabilities Education Act require that students be provided the accommodations that are on their IEPs.

- Involve students in the accommodation decision-making process. The students in this study made it clear that there were things that they liked about the read aloud accommodation in mathematics, but that there also were problems. Students offer a valuable perspective into their own needs.

- Ensure that all students, including students who use the read aloud accommodation in math, have the opportunity to learn grade-level content. Several students who participated in this study did poorly on the assessment items used in the study, with or without the read aloud accommodation. The items used in this study were NAEP released items and may not have been completely aligned with South Dakota standards. Still, this finding is of concern because it suggests that some students may not have had the opportunity to learn grade-level content.


References

Altman, J. R., Cormier, D. C., Lazarus, S. S., & Thurlow, M. L. (2012). Tennessee special education teacher survey: Training, large-scale testing, and TCAP-MAAS administration (Technical Report 61). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Altman, J. R., Thurlow, M. L., & Vang, M. (2010). Annual performance report: 2007-2008 state assessment data. Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Bogdan, R. C., & Biklen, S. K. (1992). Qualitative research for education (2nd ed.). Boston, MA: Allyn & Bacon.

Bolt, S. E., & Roach, A. T. (2009). Inclusive assessment and accountability: A guide to accommodations for students with diverse needs. New York, NY: Guilford Press.

Christensen, L. L., Braam, M., Scullin, S., & Thurlow, M. L. (2011). 2009 state policies on assessment participation and accommodations for students with disabilities (Synthesis Report 83). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Cormier, D. C., Altman, J. R., Shyyan, V., & Thurlow, M. L. (2010). A summary of the research on the effects of test accommodation: 2007-2008 (Technical Report 56). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Elbaum, B. (2007). Effects of an oral testing accommodation on the mathematics performance of secondary students with and without learning disabilities. The Journal of Special Education, 40(4), 218-229.

Hodgson, J. R., Lazarus, S. S., Price, L. M., Altman, J. R., & Thurlow, M. L. (2012). Test administrators' perspectives on the use of the read aloud accommodation in math on state tests for accountability (Technical Report 66). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Johnstone, C. J., Altman, J. R., & Thurlow, M. L. (2006). A state guide to the development of univesally designed assessments. Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Johnstone, C. J., Altman, J., Thurlow, M. L., & Thompson, S. J. (2006). A summary of research on the effects of test accommodations: 2002 through 2004 (Technical Report 45). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Johnstone, C. J., Bottsford-Miller, N. A., & Thompson, S. J. (2006). Using the think aloud method (cognitive labs) to evaluate test design for students with disabilities and English language learners (Technical Report 44). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

Rogers, C. M., Christian, E., & Thurlow, M. L. (2012). A summary of the research on the effects of test accommodations: 2009-2010 (Technical Report 65). Minneapolis MN: University of Minnesota, National Center on Educational Outcomes.

Thurlow, M. L., Lazarus, S. S., & Christensen, L. L. (2008). Role of assessment accommodations in accountability. Perspectives on Language and Literacy, 34(4), 17-20.

Thurlow, M. L., Lazarus, S. S., & Christensen, L. L., (2013). Accommodations for assessment. (94-110). In Lloyd, J.W., Landrum, T.J., Cook, B.G. & Tankersley, M. (Eds.), Research-based practices in assessment. Upper Saddle River NJ: Pearson Education, Inc.

Thurlow, M. L., Lazarus, S. S., Thompson, S. J., & Morse, A. B. (2005). State policies on assessment participation and accommodations for students with disabilities. The Journal of Special Education, 38(4), 232-240.

Thurlow, M. L., Moen, R., Lekwa, A. J., & Scullin, S. B. (2010). Examination of a reading pen as a partial auditory accommodation for reading assessment. Minneapolis, MN: University of Minnesota, Partnership for Accessible Reading Assessment.

Van Someren, M. W., Barnard, Y. F., & Sandberg, J. A. C. (1994). The think-aloud method: A practical guide to modeling cognitive processes. San Diego, CA: Academic Press Ltd.

Zenisky, A. L., & Sireci, S. G. (2007). A summary of the research on the effects of test accommodations: 2005-2006 (Technical Report 47). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.


Appendix A


Appendix B